Current Projects

3D Targets for Evaluating Fingerprint Readers

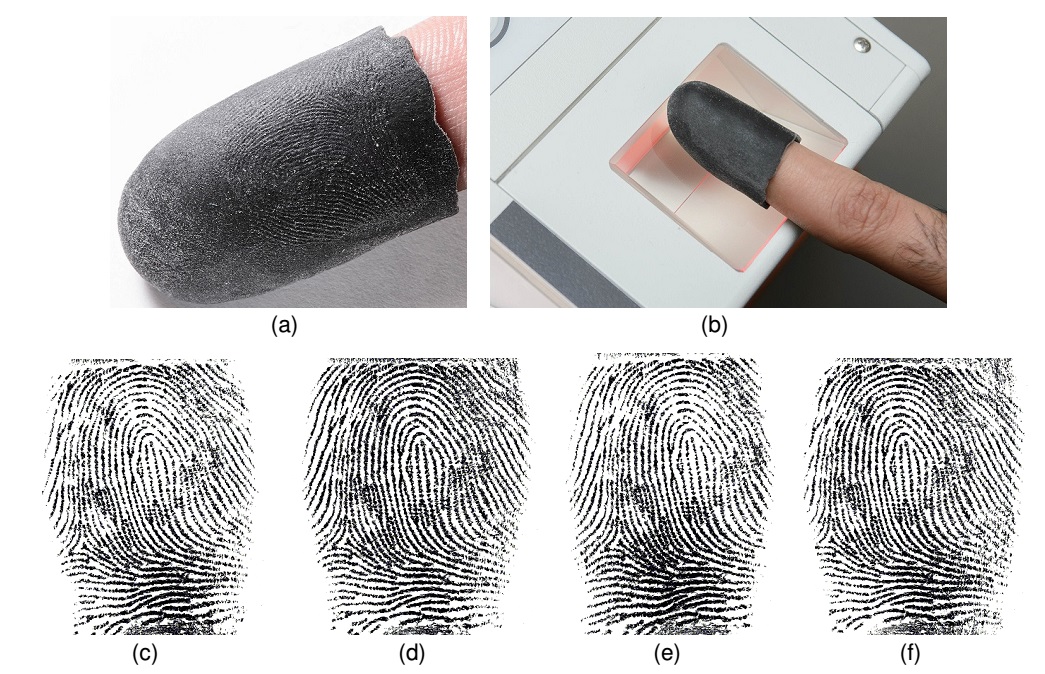

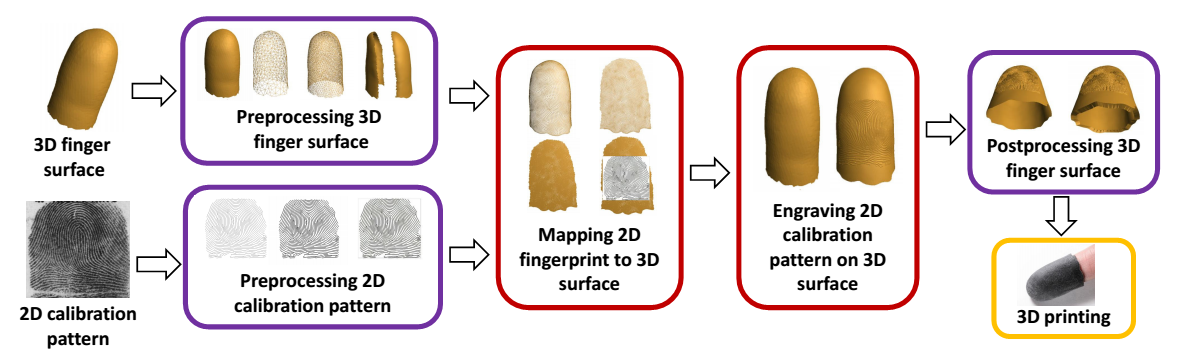

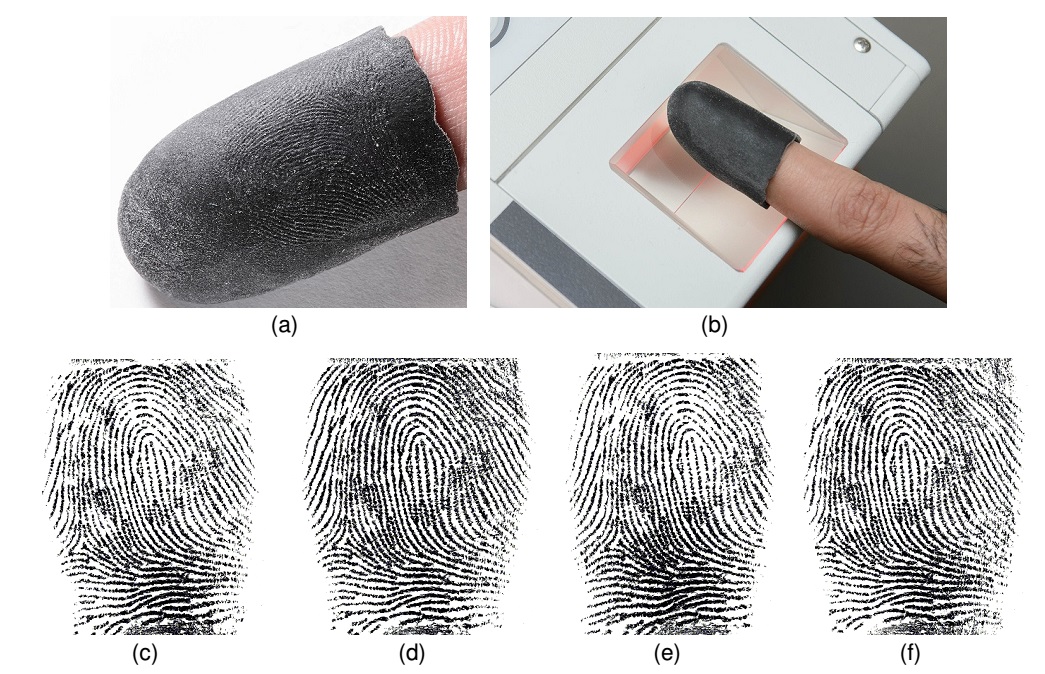

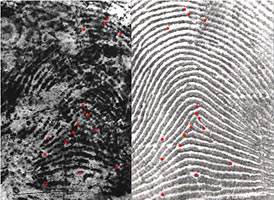

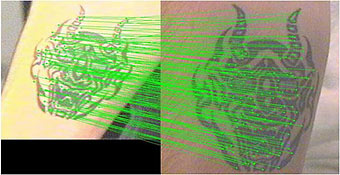

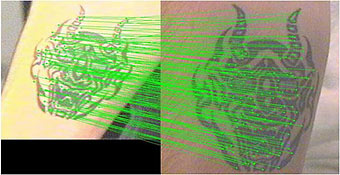

Calibration of imaging systems typically involves the use of specially designed objects with known properties, called calibration targets. Standard calibration targets are, in general, used for calibrating the imaging pathway of fingerprint readers. However, there is no standard method for evaluating fingerprint readers in the operational setting, where variations in finger placement by the user are encountered. The goal of this research is to design 3D targets for repeatable operational evaluation of fingerprint readers. 2D calibration patterns with known characteristics (e.g., sinusoidal gratings of pre-specified orientation and frequency, synthetic fingerprints with known singular points and minutiae) are projected onto a generic 3D finger surface to create electronic 3D targets (see Fig. 1). A state-of-the-art 3D printer (Stratasys Objet350 Connex) is used to fabricate the 3D targets with material similar in hardness and elasticity to human finger skin. Our experimental results show that (i) the fabricated 3D targets can be imaged using three different (500/1000 ppi) commercial optical fingerprint readers, (ii) salient features in the 2D calibration patterns are preserved during the synthesis and fabrication of 3D targets, and (iii) intra-class variability between multiple impressions of the 3D targets captured using the optical fingerprint readers does not degrade the recognition accuracy. We also conduct experiments to demonstrate that the fabricated 3D targets can be used for operational evaluation of optical fingerprint readers (see Fig. 2).
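As an illustration of the kind of 2D calibration pattern mentioned above, the following minimal sketch generates a sinusoidal grating with a chosen orientation and frequency. The function name, the image size, and the units (cycles per pixel) are assumptions made for this example; the sketch does not cover the projection onto a 3D finger surface.

```python
import numpy as np

def sinusoidal_grating(size, frequency, orientation_deg):
    """Return a size x size image in [0, 1] containing a sinusoidal grating.

    frequency is in cycles per pixel (an assumed unit); orientation_deg is the
    direction along which intensity varies, measured from the x-axis.
    """
    theta = np.deg2rad(orientation_deg)
    y, x = np.mgrid[0:size, 0:size]
    # Position of each pixel along the direction of intensity variation.
    t = x * np.cos(theta) + y * np.sin(theta)
    return 0.5 + 0.5 * np.cos(2.0 * np.pi * frequency * t)

# Grating that varies along x with a period of 10 pixels.
pattern = sinusoidal_grating(size=256, frequency=0.1, orientation_deg=0.0)
```

Because orientation and frequency are known by construction, a captured image of such a pattern can be compared against these ground-truth values.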

Relevant Publication(s)

1. S. S. Arora, K. Cao, A. K. Jain and N. G. Paulter Jr., "3D Targets for Evaluating Fingerprint Readers", MSU Technical Report, MSU-CSE-15-3, February 23, 2015. [pdf]

2. S. S. Arora, K. Cao, A. K. Jain and N. G. Paulter Jr., "3D Fingerprint Phantoms", 22nd International Conference on Pattern Recognition (ICPR), Stockholm, Sweden, August 24-28, 2014. [pdf][video]

3. S. S. Arora, K. Cao, A. K. Jain and N. G. Paulter Jr., "3D Fingerprint Phantoms", MSU Technical Report, MSU-CSE-13-12, December 24, 2013. [pdf][video]

A Longitudinal Study of Automatic Face Recognition

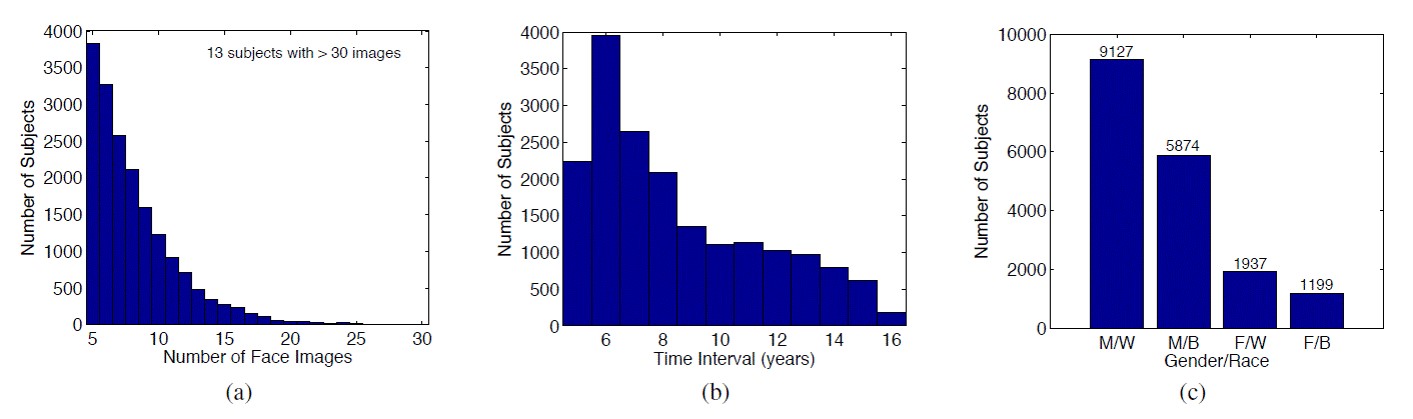

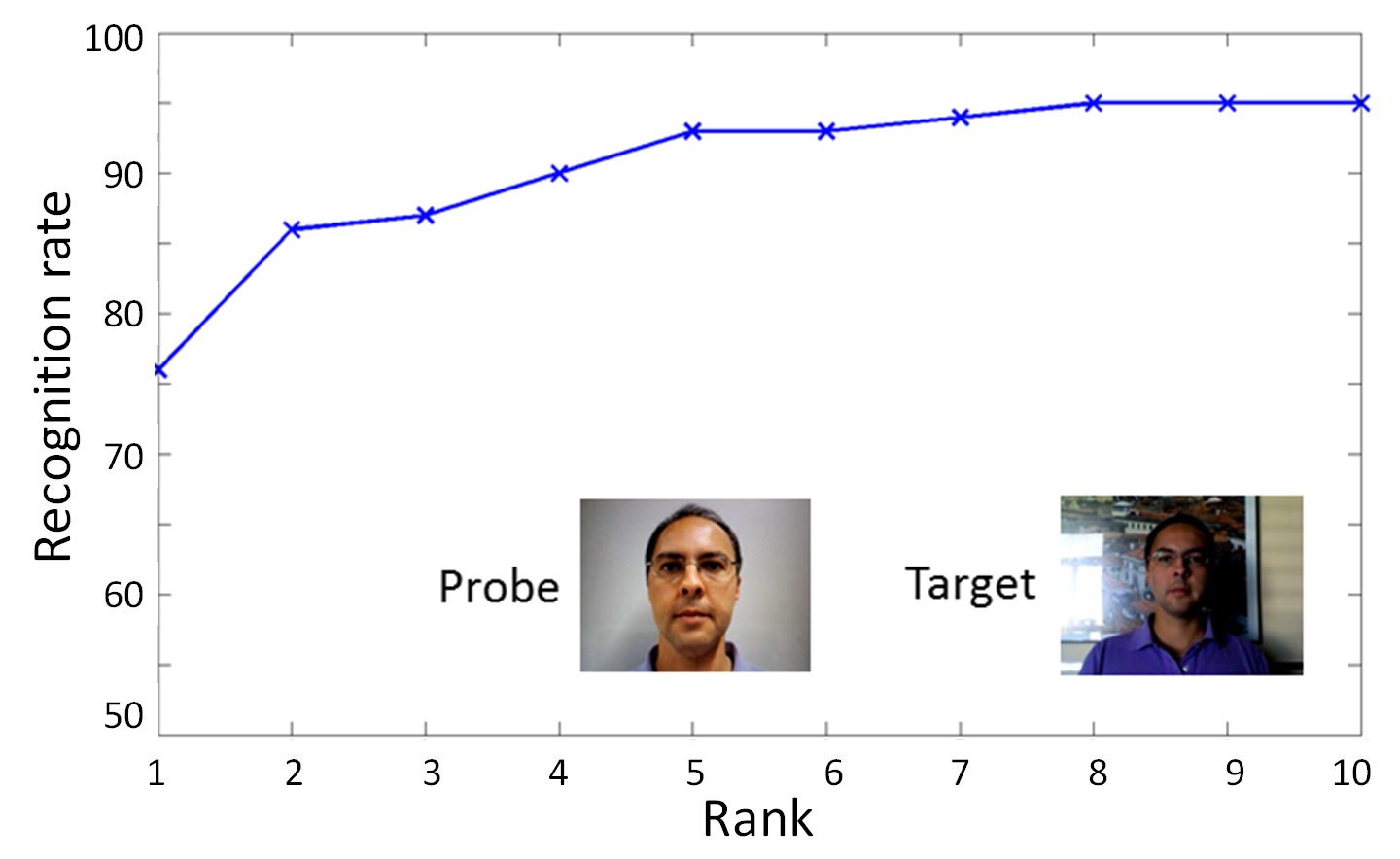

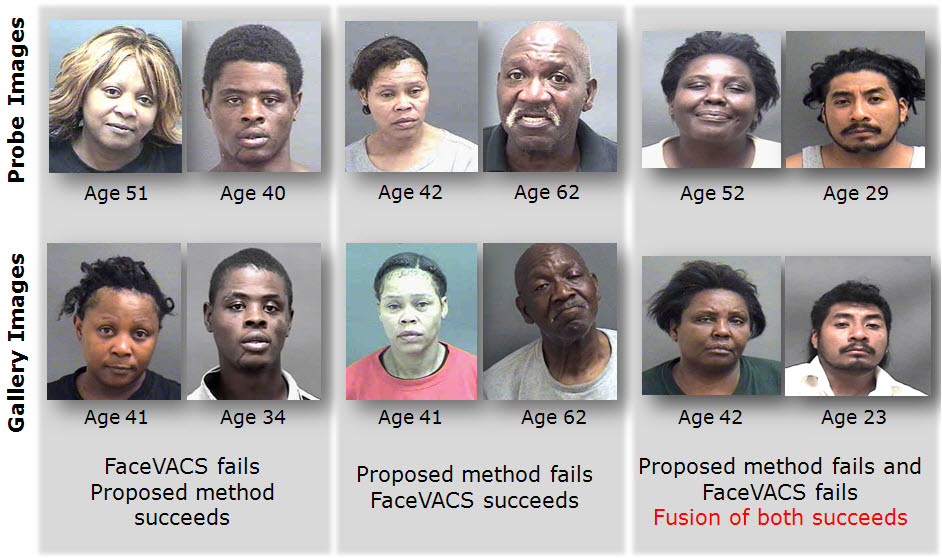

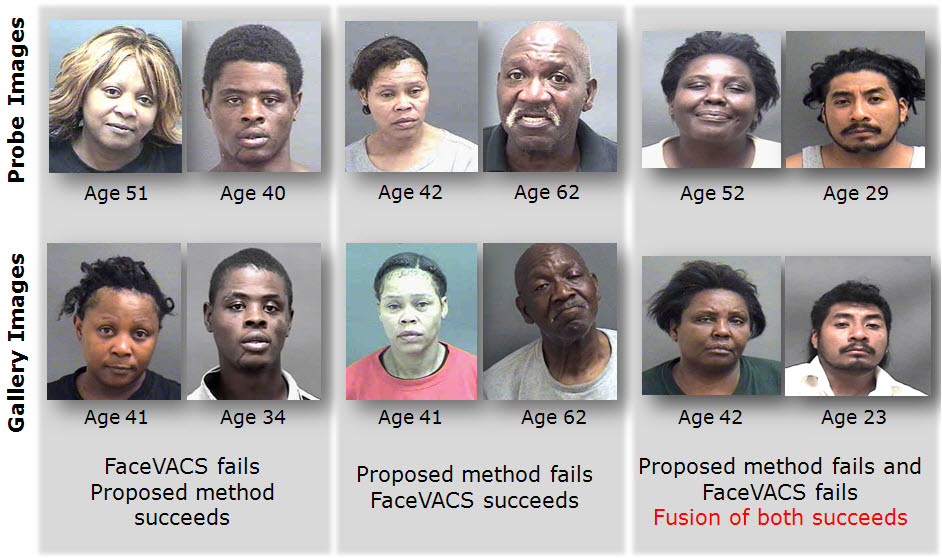

Changes in human facial characteristics over time are inevitable. Hence, it is not surprising that face matching accuracy decreases with increasing elapsed time between two face images of the same person. With the deployment of automatic face recognition systems for de-duplication and law enforcement applications, it is crucial that we gain a better understanding of how facial aging due to time lapse affects recognition performance. Some people "age well" while the facial appearance of others changes drastically over time; this disparity mandates a subject-specific aging analysis. In this ongoing project, we use multilevel statistical models on a longitudinal database of 147,784 face images of 18,007 subjects (see Figs. 1 and 2) to quantify how time lapse affects match scores and recognition accuracy. A similar approach was used by Yoon and Jain [1] for fingerprint analysis and by Grother et al. [2] for iris analysis. Using two state-of-the-art COTS face matchers, we report (i) population-mean temporal trends in genuine scores, (ii) inter-subject variations, (iii) effects of other covariates (gender, race, face quality), and (iv) probability of true acceptance over time. Our preliminary findings suggest that the false rejection rates of one of the COTS matchers remain stable for elapsed times of up to approximately 10 years.
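For readers unfamiliar with multilevel models, a generic two-level linear model of the kind commonly used for longitudinal data can be written as below. This is an illustrative sketch only: the symbols are notation assumed here, and the model specification and covariates actually used in the project may differ.

```latex
y_{ij} = (\beta_0 + b_{0i}) + (\beta_1 + b_{1i})\,\Delta T_{ij} + \varepsilon_{ij},
\qquad
(b_{0i}, b_{1i})^{\top} \sim \mathcal{N}(\mathbf{0}, \Sigma),
\quad
\varepsilon_{ij} \sim \mathcal{N}(0, \sigma^{2})
```

Here $y_{ij}$ is the genuine score of the $j$-th comparison for subject $i$ and $\Delta T_{ij}$ is the elapsed time between the two images. The fixed effects $\beta_0, \beta_1$ describe the population-mean trend, while the random effects $b_{0i}, b_{1i}$ capture how an individual subject's intercept and slope deviate from it.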

Relevant Publication(s)

1. L. Best-Rowden and A. K. Jain, "A Longitudinal Study of Automatic Face Recognition", ICB, Phuket, Thailand, May 19-22, 2015. [pdf]

References

[1] S. Yoon and A. K. Jain, "Longitudinal Study of Fingerprint Recognition", Tech. Report MSU-CSE-14-3, Michigan State University, East Lansing, MI, USA, Jun. 2014.

[2] P. Grother, J. R. Matey, E. Tabassi, G. W. Quinn, and M. Chumakov, "IREX VI: Temporal Stability of Iris Recognition Accuracy", NIST Interagency Report 7948, Jul. 2013.

Biomedical Informatics

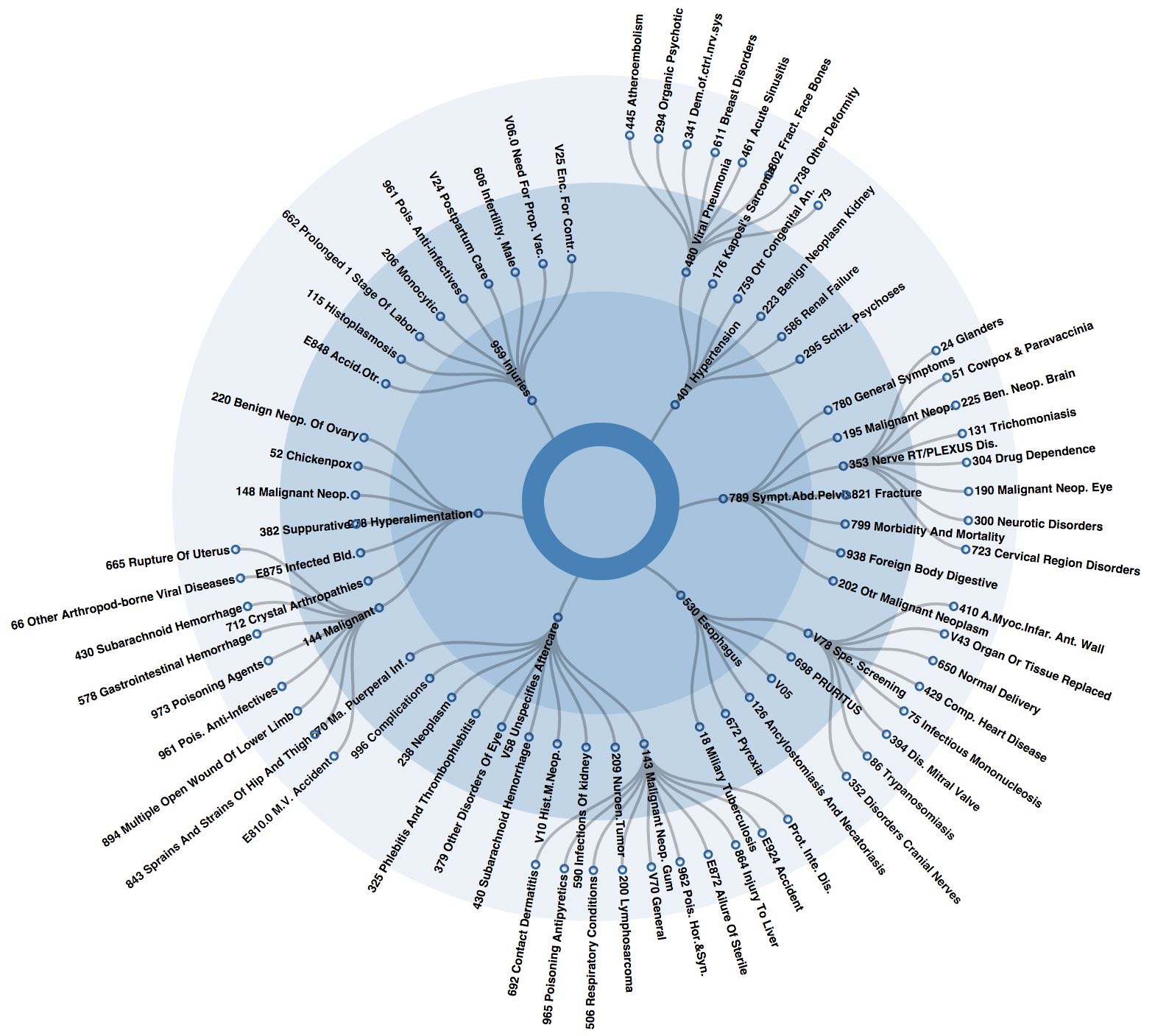

PHENOTREE: Large-Scale Hierarchical Phenotyping via Sparse Principal Component Analysis

Electronic health records (EHRs) capture comprehensive patient information in digital form from a variety of sources. The increasing availability of EHRs has facilitated the development of data and visual analytics tools, which have significantly improved many aspects of healthcare analytics, especially clinical decision support and patient care management systems. The fundamental problems facing many medical data analytics tools include studying the patient population, exploring complicated interactions among patients and their medical histories, and extracting phenotypes that characterize the patient population. Another challenge is visualizing EHR data so that clinicians can easily comprehend and interpret patterns and trends in patient populations. In this paper, we propose PHENOTREE, a novel data-driven, hierarchical, and visually interactive phenotyping method based on sparse principal component analysis (SPCA). Specifically, given the EHRs of a patient cohort, the proposed framework identifies key features characterizing the cohort using SPCA and subtypes the patients according to those features. The population can thus be iteratively refined (subtyped), and phenotypes at various levels of granularity can be formed. We provide an efficient SPCA algorithm and a visualization approach that illustrates the relations between diagnoses, which helps to interpret the patient populations in the cohort. The benefits of PHENOTREE are demonstrated through its application to the EHRs of two patient cohorts, a public dataset and a private dataset with 101,767 and 223,076 patients, respectively. Our evaluations show that PHENOTREE is beneficial for detecting clinically meaningful hierarchical phenotypes.

Relevant Publication(s)

I. M. Baytas, K. Lin, F. Wang, A. K. Jain and J. Zhou, "PHENOTREE: Large-Scale Hierarchical Phenotyping via Sparse Principal Component Analysis", IEEE Transactions on Multimedia Special Issue on Visualization and Visual Analytics for Multimedia, 2016 (submitted).[pdf]

Stochastic Convex Sparse Principal Component Analysis

Principal Component Analysis (PCA) is a dimensionality reduction and data analysis tool commonly used in many areas. The main idea of PCA is to represent high dimensional data with a few representative components that capture most of the variance present in the data. However, traditional PCA has an obvious disadvantage when it is applied to data where interpretability is important. In applications where the features have physical meaning, we lose the ability to interpret the principal components extracted by conventional PCA because each principal component is a linear combination of all the original features. For this reason, sparse PCA has been proposed to improve the interpretability of traditional PCA by introducing sparsity into the loading vectors of the principal components. Sparse PCA can be formulated as an ℓ1-regularized optimization problem, which can be solved by proximal gradient methods. However, these methods do not scale well because computation of the exact gradient is generally required at each iteration. The stochastic gradient framework addresses this challenge by computing a stochastic estimate of the gradient at each iteration. Nevertheless, stochastic approaches typically converge slowly due to the high variance of these estimates. In this paper, we propose a convex sparse principal component analysis (Cvx-SPCA), which leverages a proximal variance-reduced stochastic scheme to achieve a geometric convergence rate. We further show that the convergence analysis can be significantly simplified by using a weak condition, which allows a broader class of objectives to be applied. The efficiency and effectiveness of the proposed method are demonstrated on a large-scale electronic medical record cohort.
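To make the role of the ℓ1 regularizer concrete, the sketch below shows the soft-thresholding operator (the proximal operator of the ℓ1 norm) and a single generic proximal gradient update. It is not the Cvx-SPCA algorithm and omits variance reduction; the function names, the toy vector, and the zero gradient are assumptions chosen only to show how small loadings are driven exactly to zero.

```python
import numpy as np

def soft_threshold(v, tau):
    """Proximal operator of tau * ||v||_1 (element-wise soft-thresholding)."""
    return np.sign(v) * np.maximum(np.abs(v) - tau, 0.0)

def proximal_gradient_step(w, grad, step, lam):
    """One proximal gradient update for minimizing f(w) + lam * ||w||_1.

    grad is the gradient of the smooth term f evaluated at w.
    """
    return soft_threshold(w - step * grad, step * lam)

# Toy loading vector; the gradient of the smooth term is set to zero here
# (an artificial choice) so that only the shrinkage effect is visible.
w = np.array([0.9, -0.05, 0.3, 0.02])
w_new = proximal_gradient_step(w, grad=np.zeros_like(w), step=1.0, lam=0.1)
```

In this toy run the two small entries become exactly zero while the large ones shrink by 0.1, which is the mechanism that makes sparse loadings easier to interpret.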

Relevant Publication(s)

I. M. Baytas, K. Lin, F. Wang, A. K. Jain and J. Zhou, "Stochastic Convex Sparse Principal Component Analysis", To appear in EURASIP Journal on Bioinformatics and Systems Biology, 2016.[pdf]

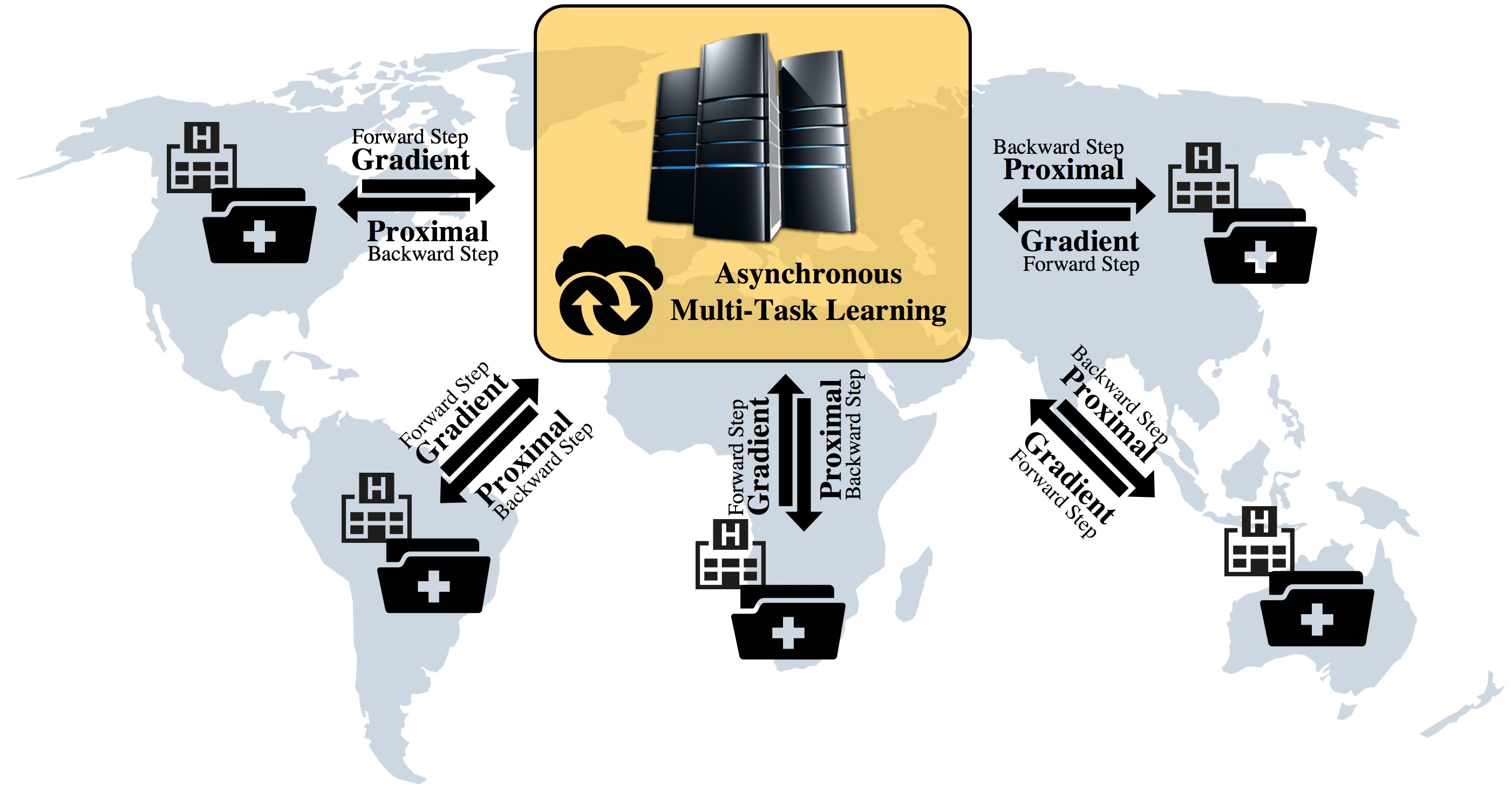

Distributed Asynchronous Multi-Task Learning

Real-world machine learning applications involve many learning tasks that are inter-related. For example, in the healthcare domain, we need to learn a predictive model of a certain disease for many hospitals. The models for each hospital can be different because of the inherent differences in the distributions of the patient populations. However, the models are also closely related because of the nature of the learning tasks, namely modeling the same disease. By simultaneously learning all the tasks, the multi-task learning (MTL) paradigm performs inductive knowledge transfer among tasks to improve the generalization performance of all tasks involved. When datasets for the learning tasks are stored in different locations, it may not always be feasible to move the data to provide a data-centralized computing environment, due to practical issues such as high data volume and data privacy. This poses a major challenge to existing MTL algorithms. In this paper, we propose a principled MTL framework for distributed and asynchronous optimization. In our framework, the gradient update does not depend on, and hence does not require waiting for, the gradient information to be collected from all the tasks, making it especially attractive when the communication delay is too high for some task nodes. Empirical studies on both synthetic and real-world datasets demonstrate the efficiency and effectiveness of the proposed framework.

Relevant Publication(s)

I. M. Baytas, M. Yan, A. K. Jain, J. Zhou, "Asynchronous Multi-Task Learning", To appear in IEEE International Conference on Data Mining (ICDM) 2016.[pdf]

Face Image Clustering

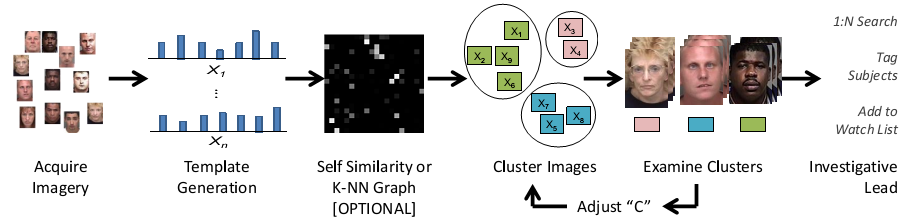

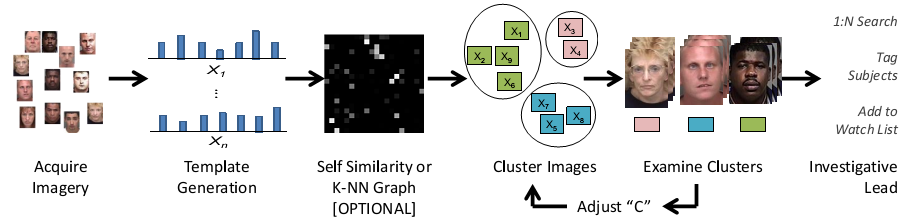

Investigations that require the exploitation of large volumes of face imagery are increasingly common in current forensic scenarios (e.g., Boston Marathon bombing), but effective solutions for triaging such imagery (i.e., sorting it into low importance, moderate importance, and critical interest) are not available in the literature. General issues for investigators in these scenarios are a lack of systems that can scale to volumes of images over 100K, and a lack of established methods for clustering the face images into the unknown number of persons of interest they contain. As such, we explore best practices for clustering large sets of face images into large numbers of clusters as a method of reducing the volume of data to be investigated by forensic analysts (Fig. 1).

Relevant Publication(s)

1. C. Otto, B. Klare, and A. K. Jain, "An Efficient Approach for Clustering Face Images", ICB, Phuket, Thailand, May 19-22, 2015.[pdf]

Face Liveness

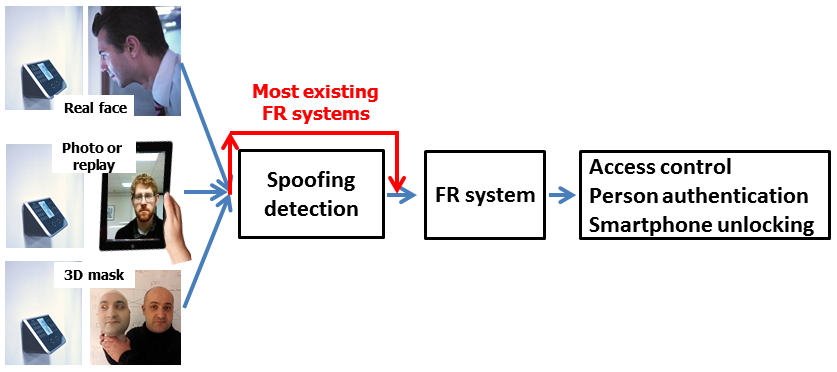

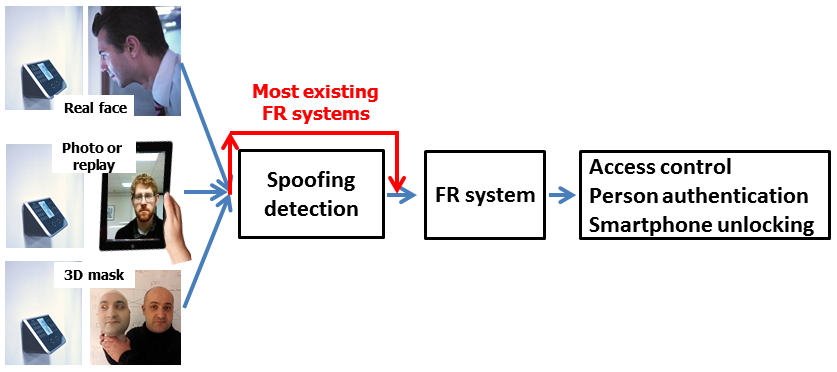

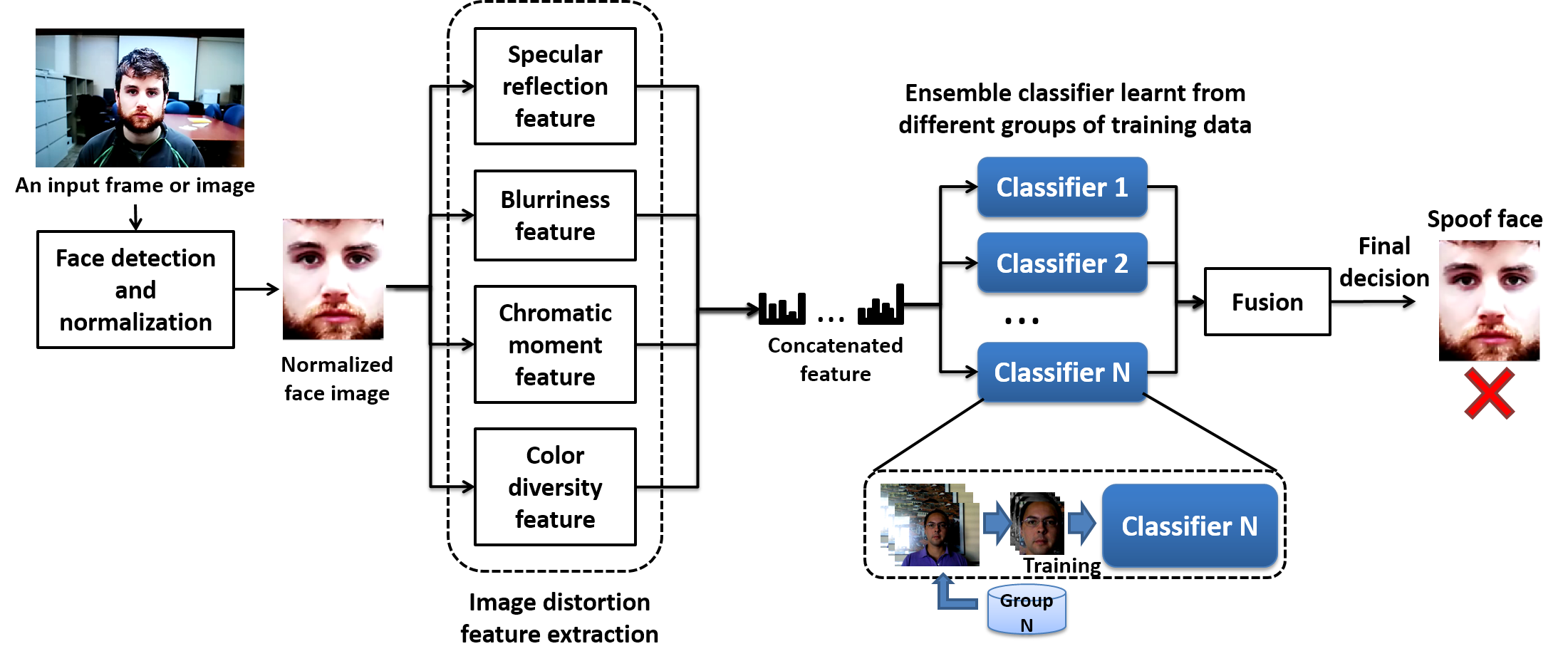

The popularity of face recognition has raised concerns about face spoof attacks (also known as biometric sensor presentation attacks), where a photo or video of an authorized person's face could be used to gain access to facilities or services. While a number of face spoof detection techniques have been proposed, their generalization ability has not been adequately addressed. In this project, we propose a new method for face liveness detection based on image quality analysis from 2D face spoof attacks (printed photos, video replays, etc.). Compared with widely used approaches based on face texture and motion analysis, the image quality analysis does not require face detection. Additionally, spoof detection based on image quality analysis should have better generalization ability than methods based on face texture or motion analysis. We also collect a face spoof database, MSU Mobile Face Spoofing Database (MSU MFSD), using two mobile devices (Google Nexus 5 and MacBook Air) with three types of spoof attacks (printed photo, replayed video with iPhone 5S, and iPad Air). The cross-database performance of individual face liveness detection methods is an important topic in this project.

In [1] we used image distortion analysis (IDA) to classify whether an input to a facial recognition system comes from a genuine user or a spoof user. Four different features (specular reflection, blurriness, chromatic moment, and color diversity) are extracted to form the IDA feature vector. Experiments show that our classifier based on IDA generalizes well, achieving state-of-the-art performance, especially in cross-database testing, which reflects real-world scenarios.

In [2] we address the problem of face spoof detection of replay attacks based on the analysis of aliasing in spoof videos. We analyze the moiré pattern aliasing that commonly appears during the recapture of video or photo replays on a screen in different channels (red, green, blue and grayscale) and regions (the whole face, detected face, facial component between the nose and chin). Multi-scale LBP and DSIFT features are used to represent the characteristics of moiré patterns. Experimental results on the Idiap replay-attack and CASIA databases, as well as on a database we collected, Replay Attacks for Smartphones (RAFS), show that the proposed approach is very effective in face spoof detection for both cross-database and intra-database testing scenarios. An Android application based on [2] has been developed and shows potential for real-world use.
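As background on the LBP texture descriptor mentioned above, the following sketch computes the basic single-scale, 8-neighbour local binary pattern and its histogram. It is a textbook version written for illustration, not the multi-scale variant or the exact pipeline of [2]; the function names and the bit ordering are assumptions of this example.

```python
import numpy as np

def lbp_3x3(gray):
    """Basic 8-neighbour LBP codes for the interior pixels of a 2D array."""
    gray = np.asarray(gray)
    h, w = gray.shape
    center = gray[1:-1, 1:-1]
    codes = np.zeros(center.shape, dtype=np.uint8)
    # Neighbours visited clockwise, starting at the top-left pixel.
    offsets = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]
    for bit, (dy, dx) in enumerate(offsets):
        neighbour = gray[1 + dy:h - 1 + dy, 1 + dx:w - 1 + dx]
        # Set this bit wherever the neighbour is at least as bright as the center.
        codes += (neighbour >= center).astype(np.uint8) * np.uint8(1 << bit)
    return codes

def lbp_histogram(gray):
    """Normalized 256-bin histogram of LBP codes, usable as a texture feature."""
    hist = np.bincount(lbp_3x3(gray).ravel(), minlength=256).astype(float)
    return hist / hist.sum()

flat = np.full((5, 5), 7)          # constant image: every code is 255
feature = lbp_histogram(flat)
```

A multi-scale descriptor would typically concatenate such histograms computed at several neighbourhood radii; periodic artifacts such as moiré patterns change the distribution of codes relative to a live capture.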

Relevant Publication(s)

[1] D. Wen, H. Han, and A. K. Jain, "Face Spoof Detection with Image Distortion Analysis", IEEE Transactions on Information Forensics and Security, Vol. 10, No. 4, pp.746-761, April 2015. [pdf] [database].

[2] K. Patel, H. Han, and A. K. Jain, "Live Face Video vs. Spoof Face Video: Use of Moire Patterns to Detect Replay Video Attacks", ICB, Phuket, Thailand, May 19-22, 2015. [pdf]

Face Retrieval

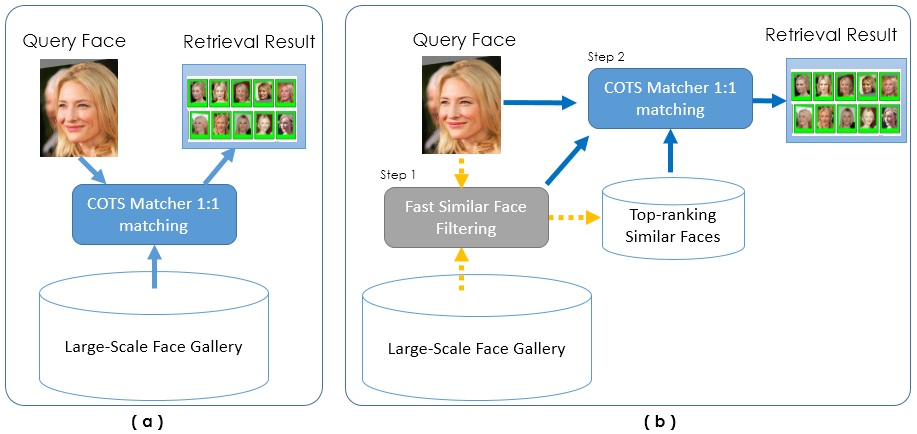

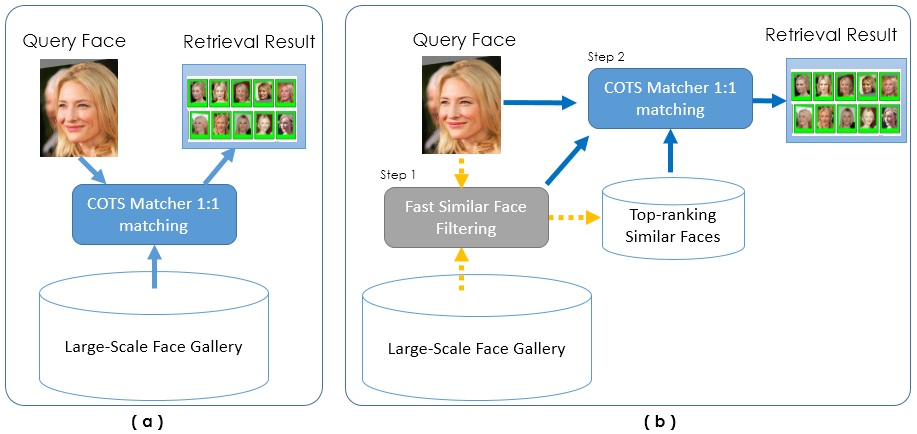

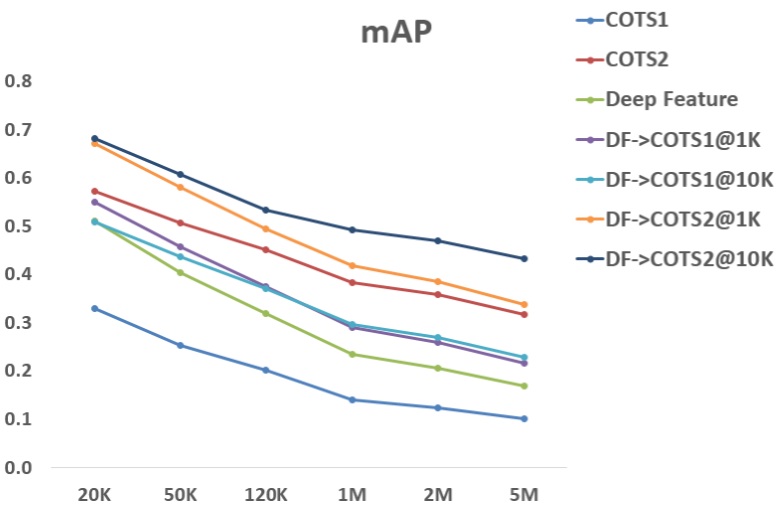

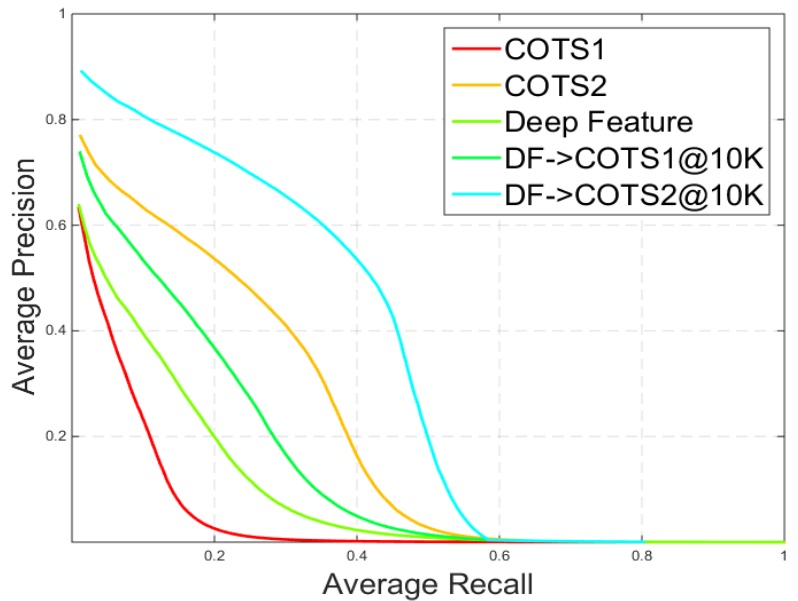

Face retrieval is an enabling technology for many applications, including automatic face annotation, deduplication, and surveillance. In this project, we aim to build a face retrieval system which combines a k-nearest neighbor search procedure with a commercial-off-the-shelf (COTS) matcher in a cascaded manner (shown in Fig. 1). In particular, given a query face image, we first pre-filter the gallery set and find the top-k most similar faces for the query image by using deep facial features that are learned offline with a deep convolutional neural network. The top-k most similar faces are then re-ranked based on score-level fusion of the similarities between deep features and the COTS matcher.
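The cascade described above can be sketched as follows. This is a simplified illustration under stated assumptions: cosine similarity stands in for the deep-feature comparison, `second_stage_score` is a hypothetical callable standing in for the COTS matcher, both scores are assumed to be on comparable scales, and the fusion is a plain weighted sum.

```python
import numpy as np

def cascaded_retrieval(query_feat, gallery_feats, second_stage_score, k, weight=0.5):
    """Pre-filter the gallery by cosine similarity, then re-rank the top-k.

    second_stage_score(i) returns the (expensive) matcher score between the
    query and gallery item i; it is only called for the k pre-filtered items.
    """
    q = query_feat / np.linalg.norm(query_feat)
    g = gallery_feats / np.linalg.norm(gallery_feats, axis=1, keepdims=True)
    cosine = g @ q
    top_k = np.argsort(-cosine)[:k]                 # stage 1: candidate list
    second = np.array([second_stage_score(i) for i in top_k])
    fused = weight * cosine[top_k] + (1.0 - weight) * second
    return top_k[np.argsort(-fused)]                # stage 2: fused re-ranking

# Toy gallery of four 2D features and made-up second-stage scores.
gallery = np.array([[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [-1.0, 0.0]])
toy_scores = [0.2, 0.9, 0.5, 0.1]
ranked = cascaded_retrieval(np.array([1.0, 0.0]), gallery,
                            lambda i: toy_scores[i], k=2)
```

In the toy run, items 0 and 1 survive the pre-filter, and the second-stage score then moves item 1 ahead of item 0. The expensive matcher is evaluated k times rather than once per gallery image, which is what lets the cascade scale.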

The proposed retrieval system can address performance and scalability simultaneously. The retrieval system is evaluated on a large-scale web face database (5 million images). The gallery set is constructed with four different web face databases (Pubfig, LFW, WLFDB, and WebFace). All overlapping subjects are removed. The proposed system is compared with two commercial face matchers, and achieves the best performance.

| | SOURCE | #SUBJECT | #IMAGES |
|---|---|---|---|
| QUERY SET | Pubfig | 100 | 2,000 |
| GALLERY SET | Pubfig | 100 | 36,061 |
| | LFW | 5,446 | 11,173 |
| | WLFDB | 3,000 | 100,575 |
| | WebFaces | | 4,881,187 |
| | TOTAL | | 5,000,000 |

Gallery sources: Pubfig [1], LFW [2], WLFDB [3], Web Faces.

Relevant Publication(s)

1. Dayong Wang and A. K. Jain, "Face Retriever: Pre-filtering the Gallery via Deep Neural Net", ICB, Phuket, Thailand, May 19-22, 2015. [pdf]

References

[1] N. Kumar, A. C. Berg, P. N. Belhumeur, and S. K. Nayar, "Attribute and Simile Classifiers for Face Verification", International Conference on Computer Vision (ICCV), 2009. [web page]

[2] G. B. Huang, M. Ramesh, T. Berg, and E. Learned-Miller, "Labeled Faces in the Wild: A Database for Studying Face Recognition in Unconstrained Environments", University of Massachusetts, Amherst, Technical Report 07-49, October 2007. [web page]

[3] D. Wang, S. C. H. Hoi, Y. He, J. Zhu, T. Mei, and J. Luo, "Retrieval-Based Face Annotation by Weak Label Regularized Local Coordinate Coding", IEEE Trans. Pattern Anal. Mach. Intell., Vol. 36, No. 3, pp. 550–563, Mar. 2014. [web page]

Image Capture and Persistence of Fingerprint Recognition for Infants and Toddlers

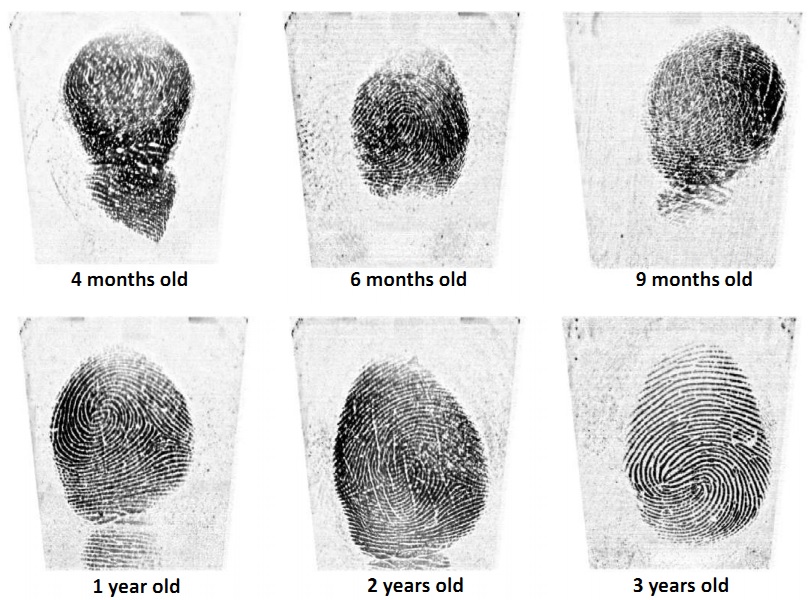

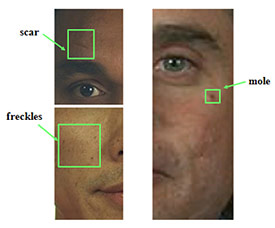

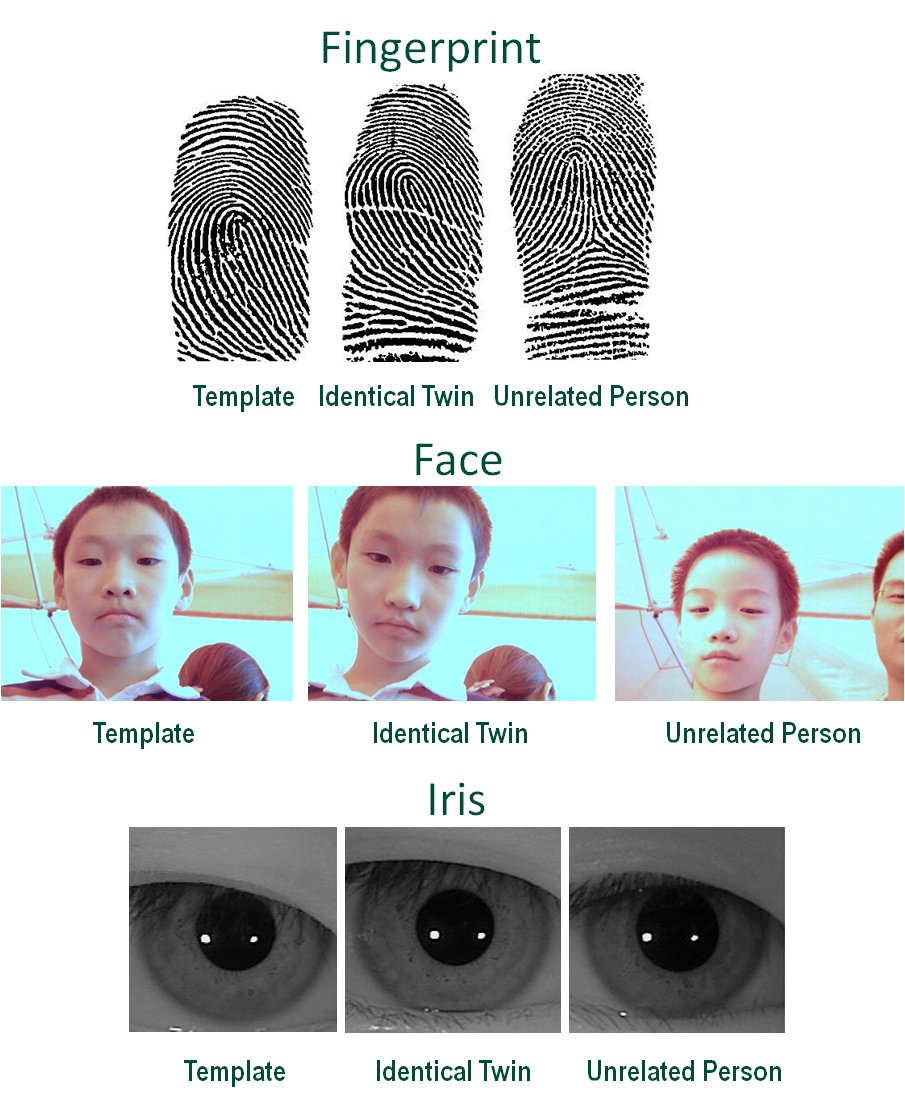

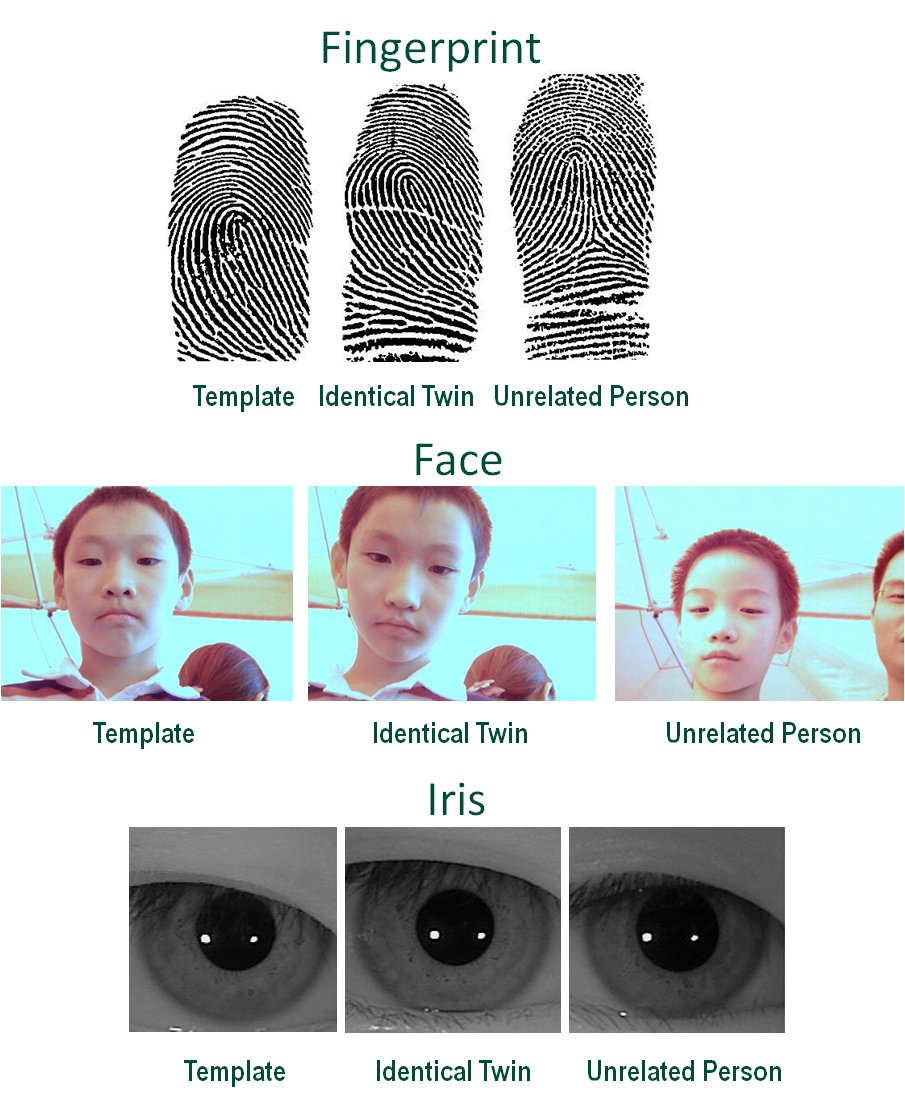

With a number of emerging applications requiring biometric recognition of children (e.g., tracking child vaccination schedules, identifying missing children and preventing newborn baby swaps in hospitals), it is important to investigate both image capture of children's biometric traits and the temporal stability of biometric recognition accuracy for children. Image capture and persistence of recognition accuracy for three of the most commonly used biometric traits (fingerprints, face and iris) have been investigated for adults. However, these have not been studied systematically for children in the age group of 0-4 years. Given that very young children are often uncooperative and do not comprehend or follow instructions, in our opinion, among all biometric modalities, fingerprints are the most viable for recognizing children. This is primarily because it is easier to capture fingerprints of young children than other biometric traits, e.g., iris, where a child needs to stare directly towards the camera to initiate iris capture. In this research, our goal is to investigate image capture and persistence of fingerprint recognition for children in the age group of 0-4 years. Based on preliminary results obtained for the data collected in the first phase of our study (see Figs. 1 and 2), the use of fingerprints for recognition of 0-4 year-old children appears promising.

Relevant Publication(s)

1. A. K. Jain, S. S. Arora, L. Best-Rowden, K. Cao, P. S. Sudhish and A. Bhatnagar, "Biometrics for Child Vaccination and Welfare: Persistence of Fingerprint Recognition for Infants and Toddlers", MSU Technical Report, MSU-CSE-15-7, April 15, 2015. [pdf]

2. A. K. Jain, K. Cao and S. S. Arora, "Recognizing Infants and Toddlers using Fingerprints: Increasing the Vaccination Coverage", IJCB, Clearwater, Florida, USA, Sept. 29-Oct. 2, 2014. [pdf]

Similarity Learning via Adaptive Regression and Its Application to Image Retrieval

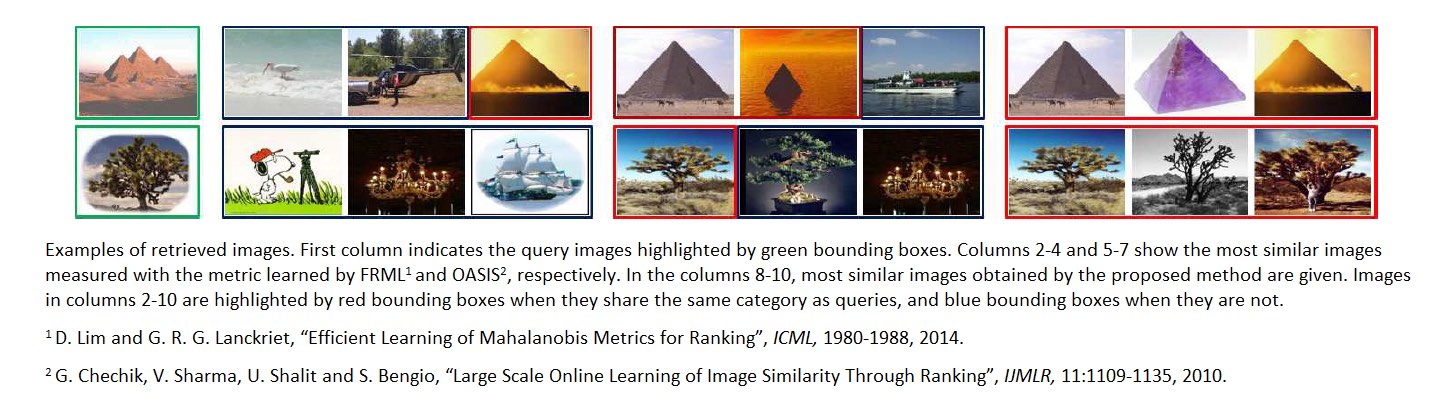

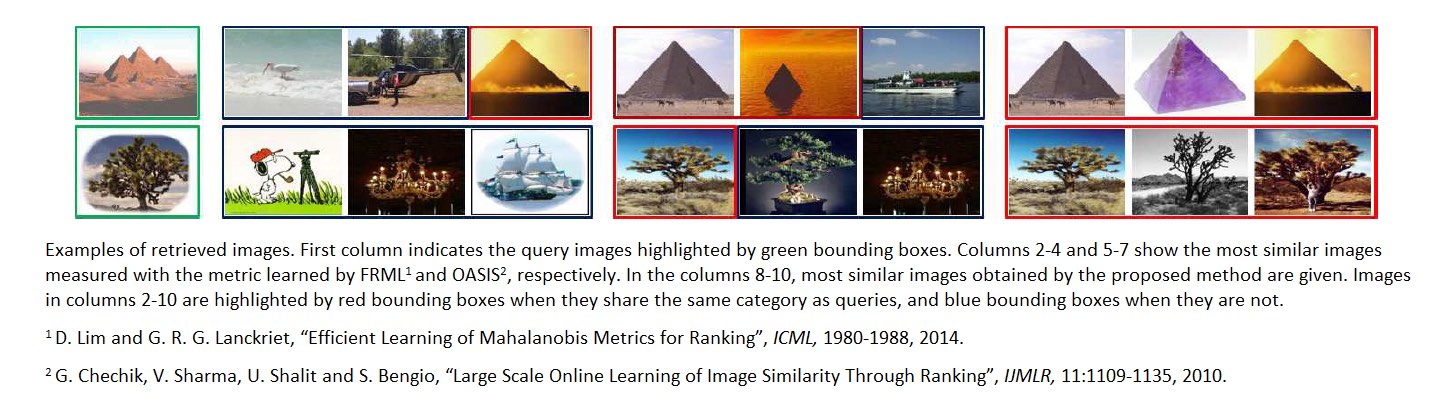

We study the problem of similarity learning and its application to image retrieval with large-scale data. The similarity between pairs of images can be measured by the distances between their high dimensional representations, and the problem of learning an appropriate similarity is often addressed by distance metric learning. However, distance metric learning requires the learned metric to be a positive semi-definite (PSD) matrix, a constraint that is computationally expensive to enforce and not necessary for retrieval ranking problems. On the other hand, the bilinear model has been shown to be more flexible for large-scale image retrieval tasks; hence, we adopt it to learn a matrix for estimating pairwise similarities under a regression framework. By adaptively updating the target matrix in the regression, we can mimic the hinge loss, which is more appropriate for similarity learning. Although the regression problem can have a closed-form solution, computing it can be very expensive. The computational challenges come from two aspects: the number of images can be very large, and the image features have high dimensionality. We address the first challenge by compressing the data with a randomized algorithm that has a theoretical guarantee. We address the high dimensionality by making a low-rank assumption and applying an alternating method to obtain the partial matrix, which has a globally optimal solution. Empirical studies on real-world image datasets (i.e., Caltech and ImageNet) demonstrate the effectiveness and efficiency of the proposed method.
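The bilinear model and the low-rank assumption can be illustrated with a short sketch. The factor names `L` and `R`, the dimensions, and the random data are assumptions of this example; the sketch shows only how a low-rank bilinear similarity is evaluated, not how the matrix is learned.

```python
import numpy as np

rng = np.random.default_rng(0)
d, r = 1000, 10                      # feature dimension and assumed rank
L = rng.standard_normal((d, r))      # hypothetical low-rank factors, M = L @ R.T
R = rng.standard_normal((d, r))

def bilinear_similarity(x, y, L, R):
    """s(x, y) = x^T (L R^T) y, computed without forming the d x d matrix."""
    return (x @ L) @ (R.T @ y)

x = rng.standard_normal(d)
y = rng.standard_normal(d)
s = bilinear_similarity(x, y, L, R)
```

The matrix `L @ R.T` is neither symmetric nor PSD in general, which reflects the point made above that ranking does not need a true metric. Evaluating the similarity through the factors costs O(dr) rather than O(d^2) per pair.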

Relevant Publication(s)

Q. Qian, I.M. Baytas, R. Jin, A.K. Jain, S. Zhu, “Similarity Learning via Adaptive Regression and Its Application to Image Retrieval”, arXiv:1512.01728 [cs.LG], 2015.[pdf]

Completed Projects

This section contains selected project abstracts and related publications. Please visit our publications page for a comprehensive list of projects, patents, books, and dissertations.

Continuous Authentication of Mobile User

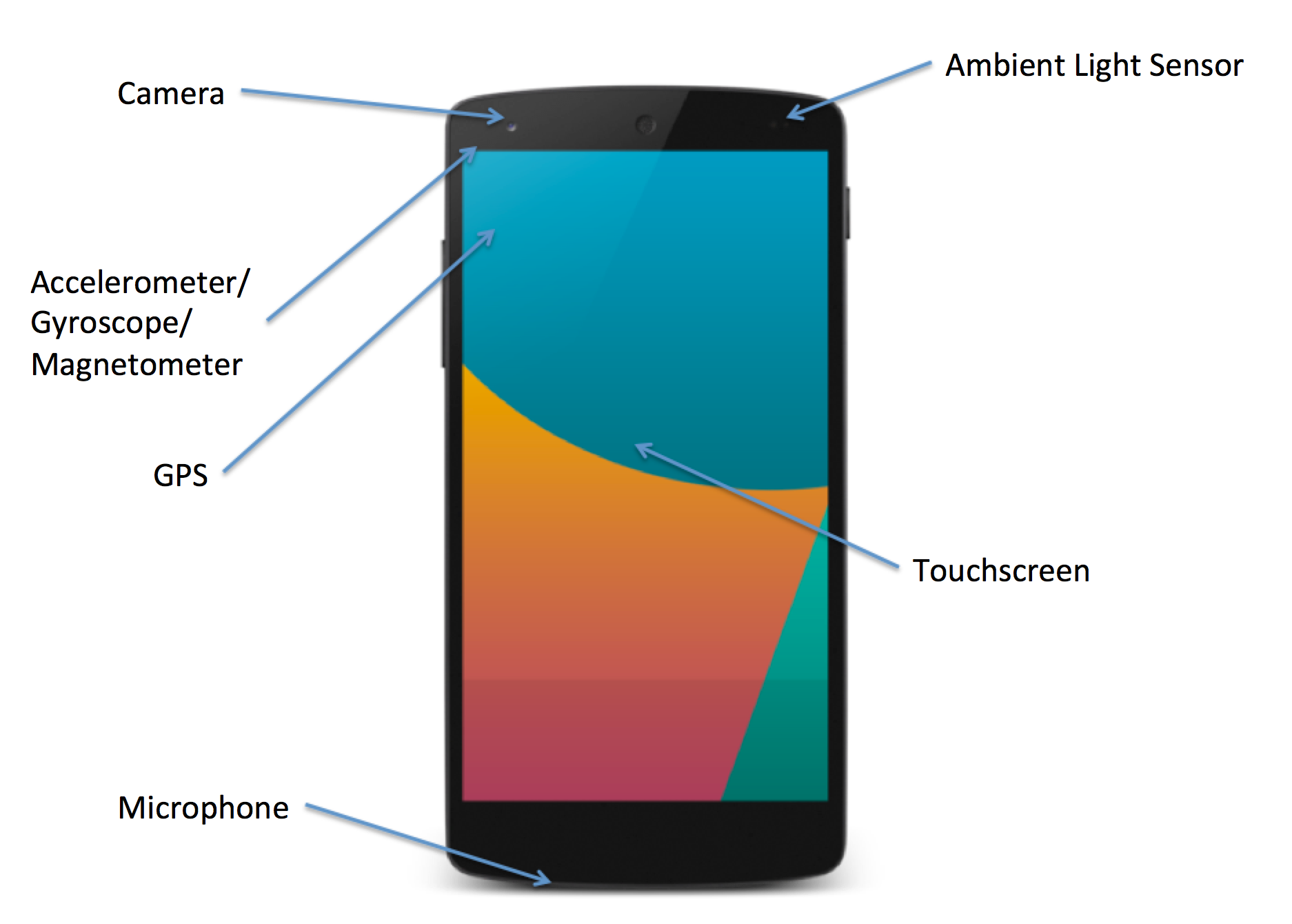

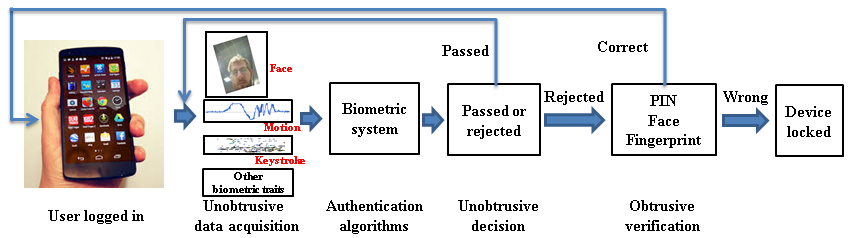

By the end of 2014, there were about 1.7 billion smartphone users worldwide. Mobile devices can carry large amounts of personal data but are often left unsecured. PIN locks are inconvenient to use and thus have seen low adoption (33% of users). While biometrics are beginning to be used for mobile device authentication, they are used only for initial unlock. Mobile devices secured with only login authentication are still vulnerable to theft in an unlocked state. This project studies a face-based continuous authentication system that operates in an unobtrusive manner. We present a methodology for fusing mobile device (unconstrained) face capture with gyroscope, accelerometer, and magnetometer data to correct for camera orientation and, by extension, the orientation of the face image. The project demonstrates (i) improvement of face recognition accuracy from face orientation correction, and (ii) efficacy of the prototype continuous authentication system. Additional face matching algorithms for matching unconstrained face images, and biometric traits such as motion, keystroke, and context will be studied.

Relevant Publication(s)

1. D. Crouse, H. Han, D. Chandra, B. Barbello, and A. K. Jain, "Continuous Authentication of Mobile User: Fusion of Face Image and Inertial Measurement Unit Data", ICB, Phuket, Thailand, May 19-22, 2015. [pdf]

2. K. Niinuma, H. Han, and A. K. Jain, "Automatic Multi-view Face Recognition via 3D Model Based Pose Regularization", BTAS, Washington, D.C., Sept. 29-Oct. 2, 2013. [pdf]

Demographic Attribute Estimation from Face Images

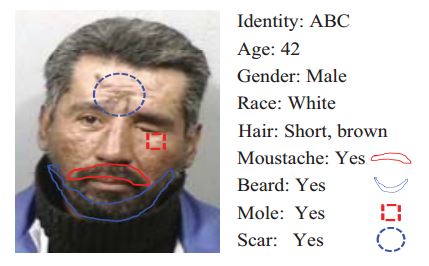

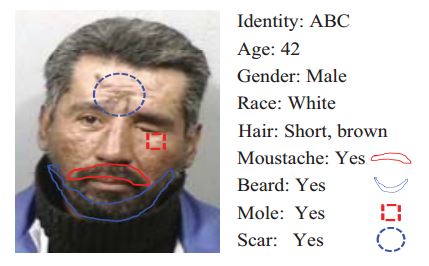

Demographic estimation entails automatic estimation of the age, gender and race of a person from a face image, and has many potential applications ranging from forensics to social media. Automatic demographic estimation, particularly age estimation, remains a challenging problem because persons belonging to the same demographic group can be vastly different in their facial appearances due to intrinsic and extrinsic factors. In this work, we present a generic framework (see Fig. 2) for automatic demographic (age, gender and race) estimation. Given a face image, we first extract demographic informative features via a boosting algorithm; we then employ a hierarchical approach consisting of between-group classification and within-group regression. Quality assessment is also developed to identify low-quality face images from which reliable demographic estimates are difficult to obtain. Experimental results on a diverse set of face image databases, FG-NET (1K images), FERET (3K images), MORPH II (75K images), PCSO (100K images), and a subset of LFW (4K images), show that the proposed approach has superior performance compared to the state of the art. We additionally use crowdsourcing to study the ability of human perception to estimate demographics from face images. A side-by-side comparison of the demographic estimates from crowdsourced data and the proposed algorithm provides a number of insights into this challenging problem.

Relevant Publication(s)

1. H. Han, C. Otto, X. Liu, and A. K. Jain. Demographic Estimation from Face Images: Human vs. Machine Performance. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2015 (To Appear). [pdf]

2. H. Han, C. Otto, and A. K. Jain. Age Estimation from Face Images: Human vs. Machine Performance. In Proc. 6th IAPR International Conference on Biometrics (ICB), Madrid, Spain, June 4-7, 2013. [pdf]

Distance Metric Learning for High Dimensional Data

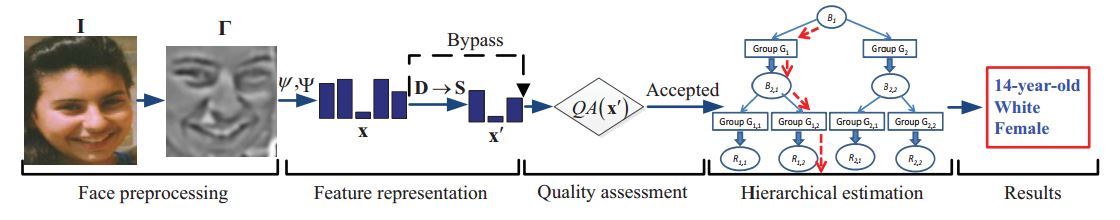

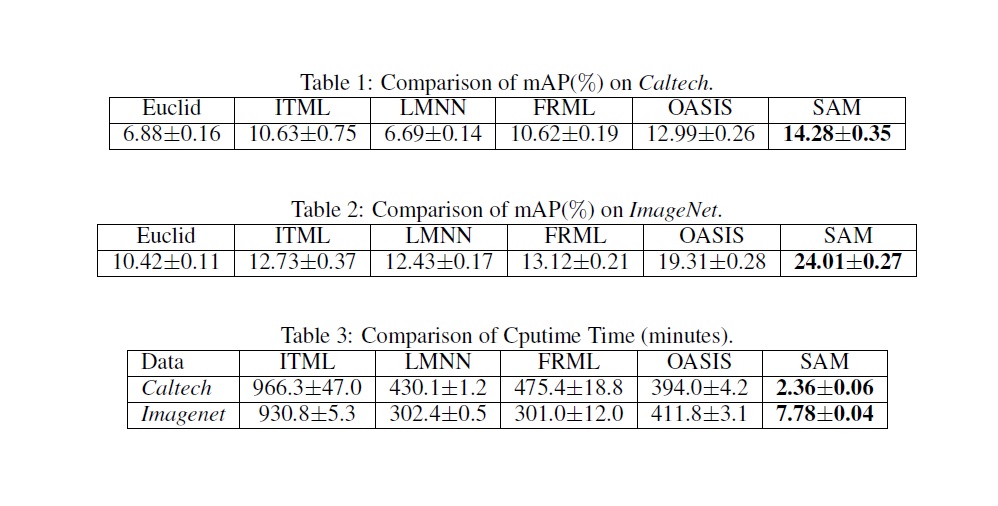

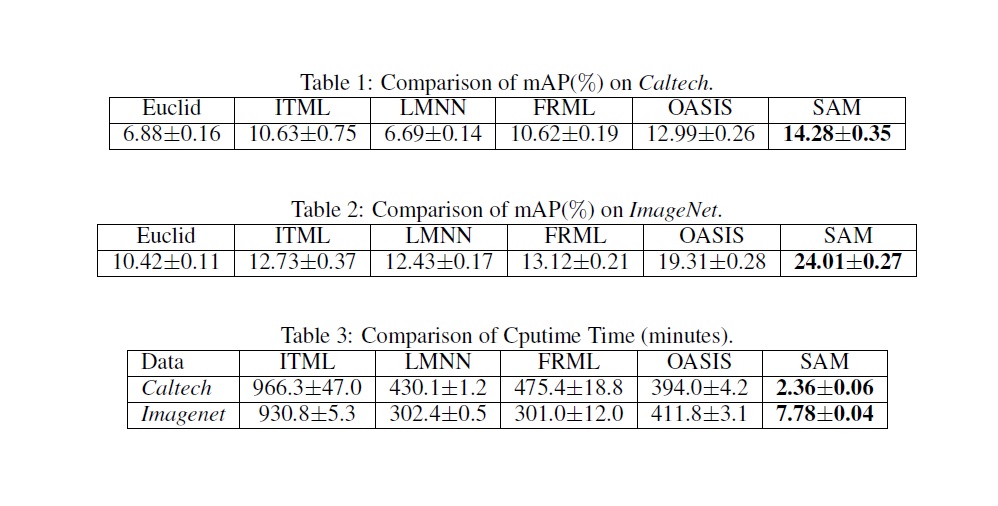

A fundamental problem in machine learning is to assess the similarity or dissimilarity between objects, patterns, and data points. Distance metric learning (DML) addresses this fundamental issue by learning a distance metric from a set of training examples such that “similar” instances are close to each other while “dissimilar” instances are separated by a large distance. Distance metric learning has been applied to many tasks in computer vision, including face detection and recognition, object tracking, and video event detection. Nowadays high dimensional data appears in almost every application domain of machine learning. In order to capture diverse patterns in the visual content of images and temporal correlation among video frames, hundreds of thousands of features are extracted, leading to very high dimensional vector representations. Besides high dimensionality, the amount of data available for learning and mining has grown exponentially in recent years. The computational challenge of high dimensional DML arises from the fact that the number of parameters to be estimated increases quadratically in the number of dimensions. The computational challenge is further complicated by the constraint that the learned distance metric has to be a Positive Semi-Definite (PSD) matrix. To ensure that a learned distance metric M is PSD, we need to perform the eigenvalue decomposition of M, an expensive operation whose cost is cubic in the dimensionality. Another concern with high dimensional DML is the overfitting problem. Again, this is because the number of independent variables in the distance metric grows quadratically in the dimensionality. This project aims to develop efficient learning algorithms that explicitly address the computational challenges in large-scale high dimensional DML. An analysis for better understanding the generalization properties of regularized empirical minimization for high dimensional DML is also developed.

In one of the studies conducted in this project, we developed a similarity learning algorithm [1]. We formulated similarity learning as a matrix regression problem. The high dimensionality is addressed by making a low-rank assumption and solving for the partial matrix with an alternating method. The large-scale problem is addressed by using random projections. The table compares the mean average precision and computational time of different metric learning methods with those of the method developed in our study, denoted SAM.
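The PSD constraint discussed above is commonly enforced by projecting the current estimate onto the PSD cone through an eigenvalue decomposition. The sketch below shows this standard projection; the function name and the 2 x 2 example are assumptions for illustration, and the sketch is not one of the algorithms developed in the project.

```python
import numpy as np

def project_to_psd(M):
    """Project a square matrix onto the PSD cone (in Frobenius norm)."""
    S = 0.5 * (M + M.T)                     # symmetrize first
    eigvals, eigvecs = np.linalg.eigh(S)    # O(d^3) eigenvalue decomposition
    eigvals = np.clip(eigvals, 0.0, None)   # drop negative eigenvalues
    return (eigvecs * eigvals) @ eigvecs.T

# A symmetric matrix with one negative eigenvalue.
M = np.array([[2.0, 0.0], [0.0, -1.0]])
M_psd = project_to_psd(M)
```

For a d-dimensional metric, the symmetric matrix has d(d+1)/2 free parameters and the decomposition costs on the order of d^3 operations, which is why repeating this projection at every iteration becomes a bottleneck in high dimensions.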

Relevant Publication(s)

1. Q. Qian, J. Hu, R. Jin, J. Pei and S. Zhu, “Distance Metric Learning Using Dropout: A Structured Regularization Approach”, Proceedings of 20th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining (KDD'14), 2014.

Facial Recognition of Lemurs

Lemurs are the world’s most endangered mammals. Analyzing individual lemur movements and interactions among a group of lemurs is essential for tracking the health of lemur populations. Current methods for identifying lemurs are limited to capturing and tagging individuals or visually identifying them by appearance. Tagging individual lemurs is expensive and disruptive to the population, both because it can cause injury and because it may shift the social dynamics of the group. Visual identification requires substantial expertise gained over time and produces results that are difficult to generalize.

Automatic facial recognition of humans is a mature technology and has many uses, from unlocking smartphones to finding suspects in surveillance video. A novel application of this technology, however, is recognizing other mammalian species. By creating a facial detection and recognition application to identify lemurs from images, this project will allow for simple and inexpensive tracking of individual lemurs in their habitat. The presence of distinctive facial features among lemurs allows for accurate recognition by extending and adapting techniques used in human face recognition to the facial anatomy of lemurs.

Relevant Publication(s)

1. Jacobs, RL, Tecot, SR, Klum, S, Crouse, D, Jain, AK (2014) Developing novel face recognition techniques for population assessments and long-term research of threatened lemurs. International Primatological Society XXV Congress, Hanoi, Vietnam.

Kernel-Based Clustering for Big Data

Kernel-based clustering algorithms achieve better performance on real world data than Euclidean distance-based clustering algorithms. However, kernel-based algorithms pose two important challenges:

- they do not scale sufficiently in terms of run-time and memory complexity, i.e. their complexity is quadratic in the number of data instances, rendering them inefficient for large data sets containing millions of data points, and

- the choice of the kernel function is very critical to the performance of the algorithm.

In this project, we aim at developing efficient schemes to reduce the running time complexity and memory requirements of these clustering algorithms and learn appropriate kernel functions from the data.

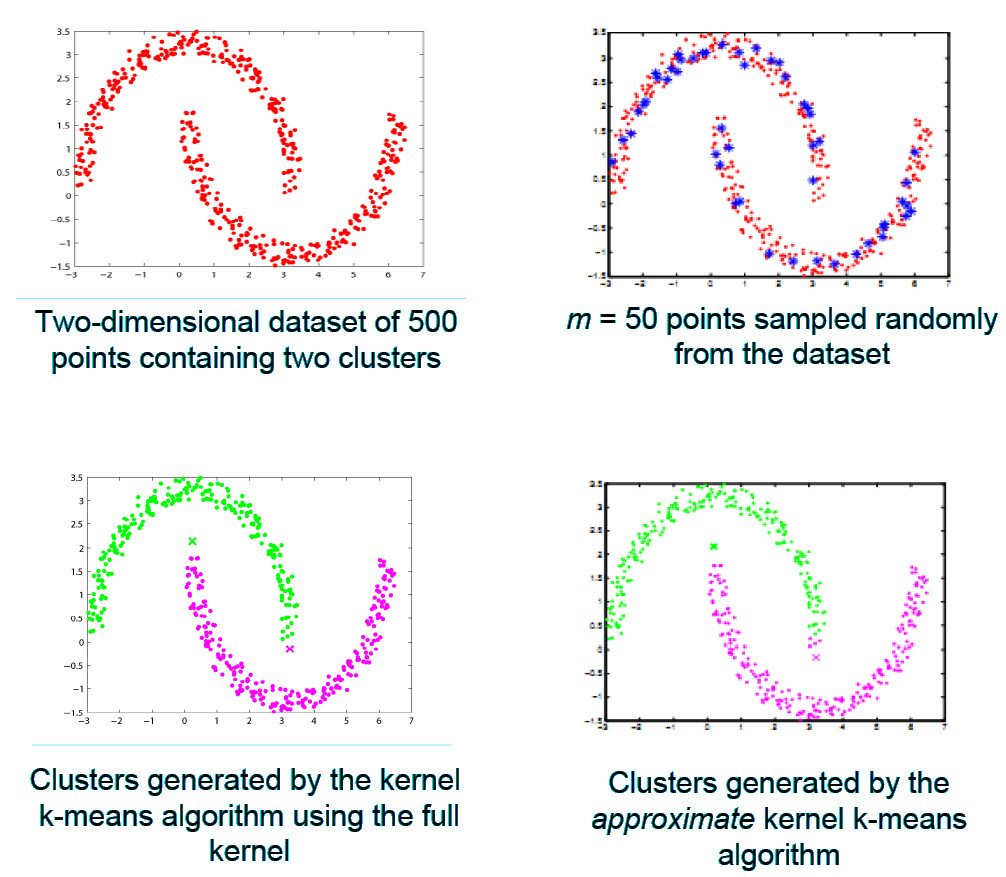

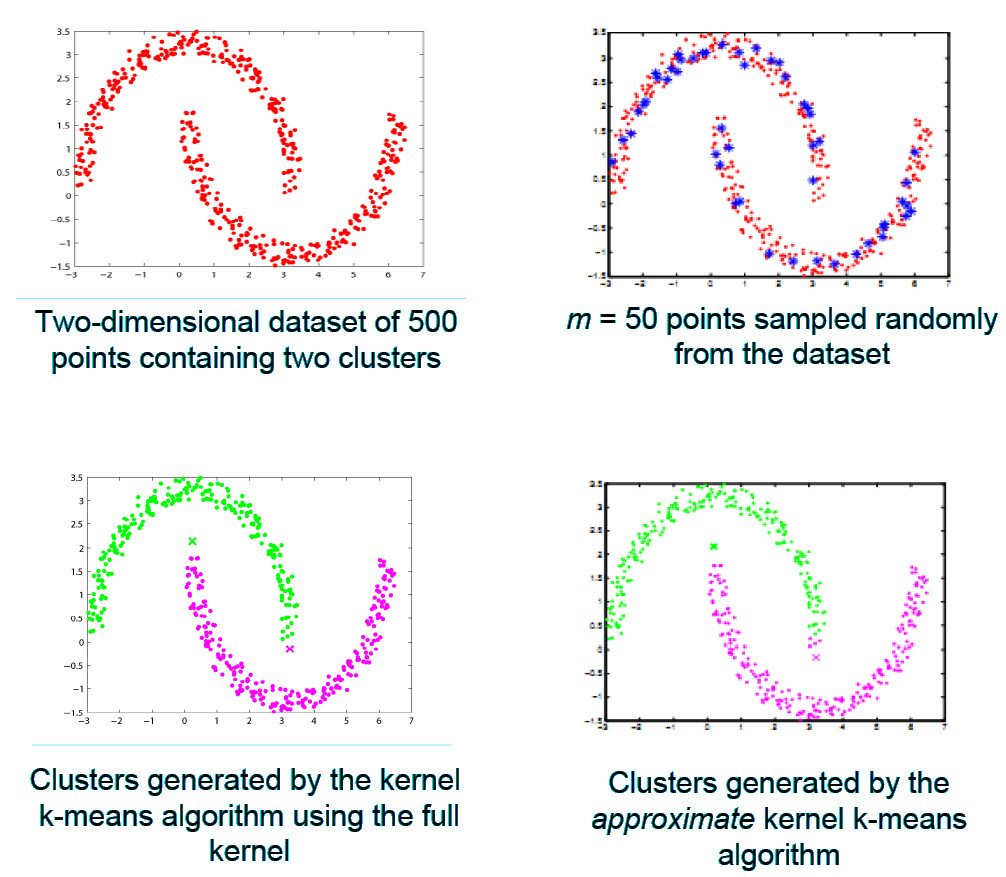

Approximate Kernel Clustering

In [1] and [2], we focus on reducing the complexity of the kernel k-means algorithm. The kernel k-means algorithm has quadratic runtime and memory complexity and cannot efficiently cluster more than a few thousand points. In [1], we developed an algorithm called Approximate Kernel k-means, which replaces the kernel similarity matrix in kernel k-means with a low-rank approximate matrix obtained by randomly sampling a small subset of the data set. The approximate kernel matrix is used to obtain the cluster labels for the data in an iterative manner (see Figure 1). The running time complexity of the approximate kernel k-means algorithm is linear in the number of points in the data set, thereby reducing the time for clustering by orders of magnitude compared to the kernel k-means algorithm. For instance, the approximate kernel k-means algorithm is able to cluster 80 million images from the Tiny data set in about 8 hours on a single core, whereas kernel k-means requires several weeks to cluster them. The clustering accuracy of the approximate kernel k-means algorithm is close to that of the kernel k-means algorithm, and the difference in accuracy decreases linearly as the number of samples increases. The approximate kernel k-means algorithm can also be easily parallelized to further reduce the time for clustering. In [2], random projection is employed to project the data in the kernel space to a low-dimensional space, where the clustering can be performed using the k-means algorithm. Figure 2 shows some sample clusters from the Tiny data set obtained using these approximate algorithms.
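The idea of replacing the full kernel matrix with a low-rank approximation built from a random sample can be sketched with a Nyström-style embedding. This is a generic illustration, not necessarily the exact algorithm of [1]; the RBF kernel, the parameter values, and the function names are assumptions of this example.

```python
import numpy as np

def rbf_kernel(A, B, gamma):
    """RBF kernel matrix between the rows of A and the rows of B."""
    sq = (A**2).sum(1)[:, None] + (B**2).sum(1)[None, :] - 2.0 * A @ B.T
    return np.exp(-gamma * np.maximum(sq, 0.0))

def nystrom_features(X, m, gamma, rng):
    """Embed X so that Z @ Z.T approximates the full n x n kernel matrix.

    Only the n x m block between all points and m sampled points is computed.
    """
    idx = rng.choice(len(X), size=m, replace=False)
    K_nm = rbf_kernel(X, X[idx], gamma)          # n x m block
    K_mm = K_nm[idx]                             # m x m kernel among the samples
    eigvals, eigvecs = np.linalg.eigh(K_mm)
    keep = eigvals > 1e-10 * eigvals.max()       # discard ill-conditioned directions
    Z = K_nm @ (eigvecs[:, keep] / np.sqrt(eigvals[keep]))
    return Z, idx

rng = np.random.default_rng(0)
X = rng.standard_normal((500, 5))
Z, idx = nystrom_features(X, m=50, gamma=0.1, rng=rng)
```

Running ordinary k-means on the rows of `Z` then approximates kernel k-means. The kernel is evaluated for n x m pairs instead of n x n, so the cost grows linearly with the number of points for a fixed sample size.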

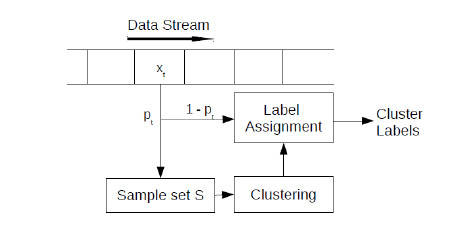

Stream Kernel Clustering

Stream data is generated continuously and is unbounded in size. Stream data clustering, particularly kernel-based clustering, is additionally challenging because it is not possible to store all the data and compute the kernel similarity matrix. In [5], we devise a sampling based algorithm which selects a subset of the stream data to construct an approximate kernel matrix. Each data point is sampled as it arrives, with probability proportional to its importance in the construction of the low-rank kernel matrix. Clustering is performed in linear time using k-means, in a low-dimensional space spanned by the eigenvectors corresponding to the top eigenvalues of the kernel matrix constructed from the sampled points. Points which are not sampled are assigned labels in real-time by selecting the cluster center closest to them (Figure 3). We evaluated this algorithm on the Twitter stream, which was clustered with high accuracy at a speed of about 1 Mbps. Figure 4 shows sample tweets from the cluster representing the #HTML hashtag.

Relevant Publication(s)

[1] R. Chitta, R. Jin, T. C. Havens, and A. K. Jain, "Approximate Kernel k-means: solution to Large Scale Kernel Clustering", KDD, San Diego, CA, August 21-24, 2011.

[2] T. C. Havens, R. Chitta, A. K. Jain, and R. Jin, "Speedup of Fuzzy and Possibilistic Kernel c-Means for Large-Scale Clustering", Proc. IEEE Int. Conf. Fuzzy Systems, Taipei, Taiwan, June 27-30, 2011.

[3] R. Chitta, R. Jin, and A. K. Jain, "Efficient Kernel Clustering using Random Fourier Features", ICDM, Brussels, Belgium, Dec. 10-13, 2012.

[4] R. Chitta, R. Jin, and A. K. Jain, "Scalable Kernel Clustering: Approximate Kernel k-means", arXiv preprint arXiv:1402.3849, 2014.

[5] R. Chitta, R. Jin, and A. K. Jain, "Stream Clustering: Efficient Kernel-Based Approximation using Importance Sampling", (Under Review).

Automated Cancer Detection and Grading

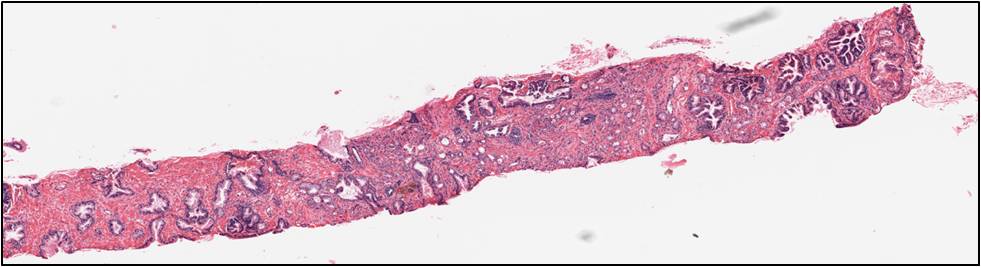

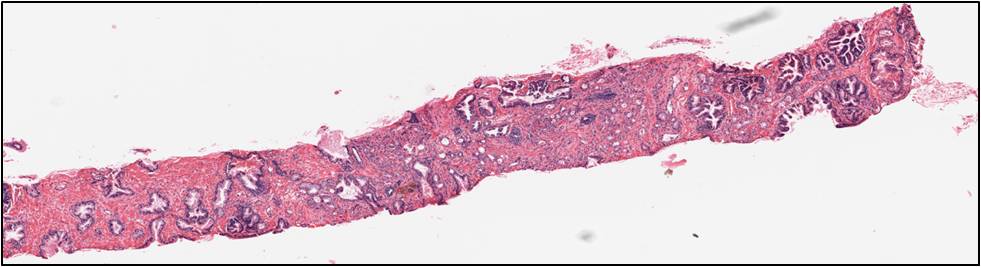

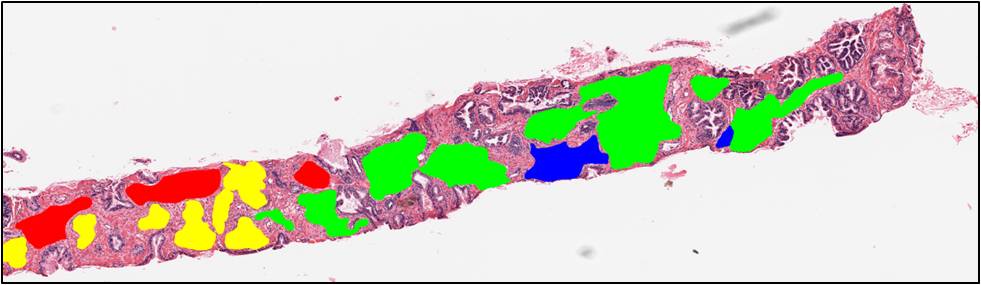

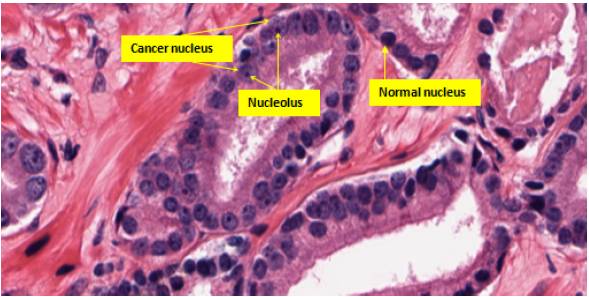

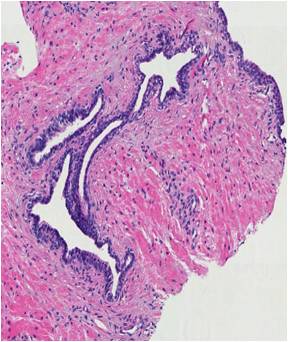

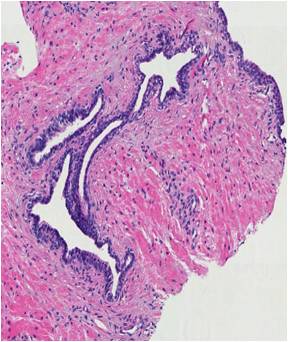

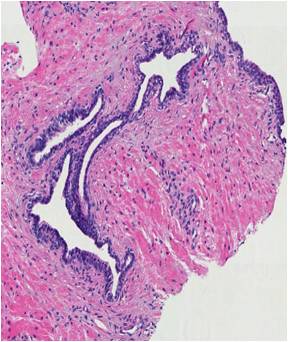

A computer-aided system for histological prostate cancer diagnosis can assist pathologists by automating two stages. The first is to locate cancer regions in the large digitized tissue biopsy, and the second is to assign grades to those regions. This project addresses both stages. In the first stage, besides well-known textural features, we use cytological features, which are not utilized in the Gleason grading method, to detect cancer regions in a whole slide tissue image at 20x magnification (approximately 5,000×20,000 pixels). One of the most important cytological features is the presence of cancer nuclei (nuclei with prominent nucleoli) in the cancer regions. Both cytological and textural features are extracted from the tissue image and combined to detect cancer regions.

In the second stage, motivated by the Gleason grading method, we aim at classifying a pre-selected region of interest (ROI) into one of three common categories: benign, grade 3 and grade 4. In benign ROIs, glands are well defined, with lumina regions in the center circumscribed by epithelial nuclei and epithelial cytoplasm. In grade 3 ROIs, glands are small and circular with thin boundaries. Finally, glands start to fuse with each other in grade 4 ROIs. As a result, glands in grade 4 regions do not maintain the well-defined structure seen in benign regions. We propose a segmentation-based approach in which we (i) segment glands from the tissue region, (ii) extract structural features from these segmented glands and (iii) classify the ROI into one of the three classes.

Relevant Publication(s)

K. Nguyen, B. Sabata, and A.K. Jain, "Prostate Cancer Grading: Gland Segmentation and Structural Features", Pattern Recognition Letters, Vol. 33, No. 7, pp. 951-961, May 2012.

K. Nguyen, A. Sarkar, and A. K. Jain, "Structure and Context in Prostatic Gland Segmentation and Classification", In: N. Ayache et al. (eds.) MICCAI 2012, LNCS, Vol. 7510, pp. 115-123, Springer, Heidelberg, 2012.

K. Nguyen, A. K. Jain, and B. Sabata, "Prostate Cancer Detection: Fusion of Cytological and Textural Features", Journal of Pathology Informatics, Vol. 2, No. 2 [p. 3], 2011.

K. Nguyen, A. K. Jain, and B. Sabata, "Prostate Cancer Detection: Fusion of Cytological and Textural Features", MICCAI - Workshop on Histopathology Image Analysis, Toronto, Canada, Sep. 18-22, 2011.

K. Nguyen, A. K. Jain and R. Allen, "Automated Gland Segmentation and Classification for Gleason Grading of Prostate Tissue Images", ICPR, Istanbul, Turkey, August 23-26, 2010.

Biometric Template Security

With the large-scale deployment of biometric systems, a number of security and privacy issues are arising. It has been shown that stored fingerprint minutiae templates can be used to reconstruct the whole fingerprint, so spoof fingerprints can easily be created to compromise these systems. Further, cross-linking of databases becomes possible, compromising privacy. To curb such vulnerabilities, a number of template protection techniques have been designed that either allow template revocation (called feature transformation based techniques) or keep the system secure even if the stored information is leaked (called biometric cryptosystems). Significant advances are still being made toward techniques that combine the revocability of feature transformation with the security of biometric cryptosystems while retaining high matching performance.

Relevant Publication(s)

A. Nagar, K. Nandakumar, and A. K. Jain, "Multibiometric Cryptosystems based on Feature Level Fusion", IEEE Transactions on Information Forensics and Security, Vol. 7, No. 1, pp. 255-268, February 2012.

A. K. Jain and K. Nandakumar, "Biometric Authentication: System Security and User Privacy", IEEE Computer, November, 2012.

A. Nagar, K. Nandakumar, and A. K. Jain, "Multibiometric Cryptosystems", MSU Technical Report, MSU-CSE-11-4, 2011.

J. Feng and A. K. Jain, "Fingerprint Reconstruction: From Minutiae to Phase", IEEE Transactions on Pattern Analysis and Machine Intelligence, Vol. 33, No. 2, pp. 209-223, February, 2011.

A. Nagar, K. Nandakumar, and A. K. Jain, "A hybrid biometric cryptosystem for securing fingerprint minutiae templates", Pattern Recognition Letters, Vol. 31, No. 8, pp. 733-741, June 2010.

A. Nagar, K. Nandakumar, and A. K. Jain, "Biometric Template Transformation: A Security Analysis", Proc. of SPIE, Electronic Imaging, Media Forensics and Security XII, San Jose, Jan. 2010.

J. Feng, and A. K. Jain, "FM Model Based Fingerprint Reconstruction from Minutiae Template," Proc. International Conference on Biometrics (ICB), June, 2009.

A. K. Jain, K. Nandakumar, and A. Nagar, "Biometric Template Security," EURASIP Journal on Advances in Signal Processing, Special Issue on Advanced Signal Processing and Pattern Recognition Methods for Biometrics, January 2008.

K. Nandakumar, A. K. Jain, and S. Pankanti, "Fingerprint-based Fuzzy Vault: Implementation and Performance," IEEE Transactions on Information Forensics and Security, Vol. 2, No. 4, pp. 744-757, December 2007.

K. Nandakumar, A. Nagar, and A. K. Jain, "Hardening Fingerprint-based Fuzzy Vault Using Password", Proc. 2nd Intl. Conf. on Biometrics (ICB), pp. 927-937, Seoul, South Korea, August 2007.

A. Nagar, K. Nandakumar, and A. K. Jain, "Securing Fingerprint Template: Fuzzy Vault with Minutiae Descriptors", Proc. ICPR, Dec., 2008.

Extended Features in Fingerprints

Automatic Fingerprint Identification Systems (AFIS) have for a long time used only minutiae for fingerprint matching. But minutiae are only a small subset of the fingerprint details routinely used by latent examiners. This has generated a lot of interest in the extended feature set (EFS), with the aim of narrowing the gap between the performance of AFIS and latent examiners. Typical extended features include dots, incipient ridges, pores, and ridge edge features (see Fig. 1). Although some researchers have reported that extended features improve automatic fingerprint recognition accuracy, using their own private fingerprint databases and in-house fingerprint matchers, it is still not clear if and how much extended features can benefit AFIS when low quality fingerprint images (such as ink and latent fingerprints) are used and when commercial off-the-shelf (COTS) fingerprint matchers are used as the baseline. The goals of this project are to: (i) design automatic extraction and matching algorithms for extended features (particularly pores, dots, incipient ridges, and ridge edge protrusions), (ii) determine the utility of extended features in matching low quality fingerprints and latent fingerprints, (iii) explore how to incorporate extended features into existing AFIS, and (iv) make recommendations regarding the effectiveness of extended features. Fig. 2 shows the pores extracted by our extraction algorithm in an example fingerprint image, and Fig. 3 shows the mated pores found by our matching algorithm in a latent and its exemplar fingerprint. According to our preliminary results (see Fig. 4) of matching 131 partial inked fingerprint images against 4,180 rolled inked fingerprint images (all images are 1,000 ppi), we observed that (i) minutiae matchers usually work very well when there is a sufficient number of minutiae, and (ii) extended features contribute most for fingerprints which have few minutiae or low minutiae match scores.

Relevant Publication(s)

Q. Zhao, J. Feng, and A. K. Jain, "Latent Fingerprint Matching: Utility of Level 3 Features", MSU Technical Report. MSU-CSE-10-14, Aug. 2010.

Q. Zhao and A. K. Jain, "On the Utility of Extended Fingerprint Features: A Study on Pores", CVPR, Workshop on Biometrics, San Francisco, June 18, 2010.

Project: Face Recognition at a Distance

Face recognition systems typically have a rather short operating distance, with standoff (the distance between the camera and the subject) limited to 1-2 meters. When these systems are used to capture face images at a larger distance (5 m), the resulting images contain only a small number of pixels in the face region, which degrades face recognition performance. To address this problem, we propose a dual camera system consisting of PTZ and static cameras to acquire high resolution face images at distances of up to 12 meters. The proposed camera system uses a coaxial and concentric configuration of the static and PTZ cameras to achieve distance-invariant PTZ camera control. We also use a linear prediction model and a camera control scheme to mitigate delays in image processing and mechanical camera motion. In our tests, the proposed system acquires face images at a larger standoff and remains effective for face recognition.

Relevant Publication(s)

H. Maeng, S. Liao, D. Kang, S.-W. Lee, and A. K. Jain, "Nighttime Face Recognition at Long Distance: Cross-distance and Cross-spectral Matching", ACCV, Daejeon, Korea, Nov. 5-9, 2012.

H. Maeng, H.-C. Choi, U. Park, S.-W. Lee, and A. K. Jain, "NFRAD: Near-Infrared Face Recognition at a Distance", IJCB, Washington, DC, Oct. 11-13, 2011.

H.-C. Choi, U. Park, and A. K. Jain, "PTZ Camera Assisted Face Acquisition, Tracking & Recognition," Biometrics: Theory, Applications and Systems (BTAS), 2010.

Fingerprint Alteration

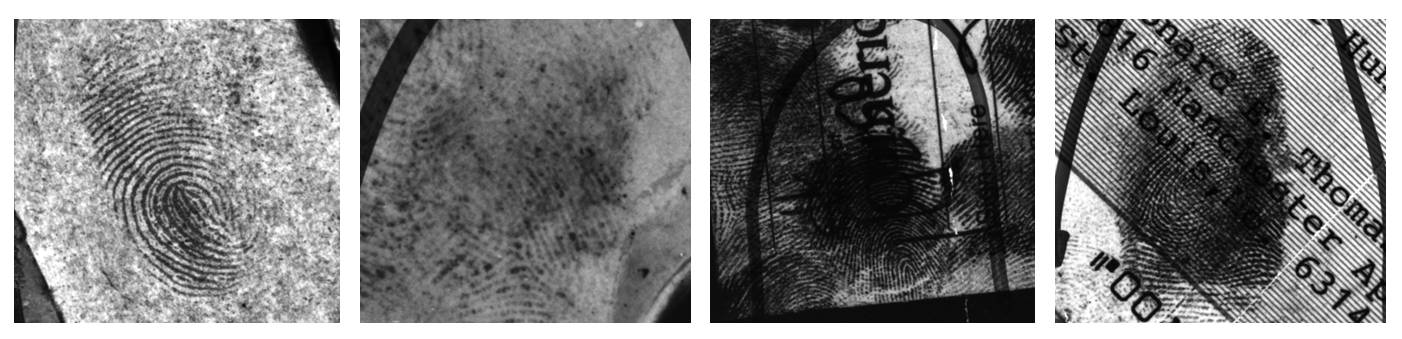

The widespread deployment of Automated Fingerprint Identification Systems (AFIS) in law enforcement and border control applications has heightened the need for ensuring that these systems are not compromised. While several issues related to fingerprint system security have been investigated, including the use of fake fingerprints for masquerading identity, the problem of fingerprint alteration or obfuscation has received very little attention. Fingerprint obfuscation refers to the deliberate alteration of the fingerprint pattern by an individual for the purpose of masking their identity. Several cases of fingerprint obfuscation have been reported in the press (see Fig. 1). Fingerprint image quality assessment software (e.g., NFIQ) cannot always detect altered fingerprints, since the implicit image quality may not change significantly as a result of the alteration. The goals of this research include: (i) analyzing altered fingerprints based on a large altered fingerprint database provided to us by a law enforcement agency, (ii) detecting altered fingerprints automatically at a very low false positive rate (i.e., the rate at which a natural, unaltered fingerprint is misclassified as altered), (iii) restoring altered fingerprints where possible, and (iv) matching altered fingerprints to their unaltered mates in the database.

Relevant Publication(s)

S. Yoon, J. Feng, and A. K. Jain, "Altered Fingerprints: Analysis and Detection", IEEE Transactions on Pattern Analysis and Machine Intelligence, Vol. 34, No. 3, pp. 451-464, March 2012.

S. Yoon, Q. Zhao, and A. K. Jain, "On Matching Altered Fingerprints", ICB, New Delhi, India, March 29-April 1, 2012.

A. K. Jain and S. Yoon, "Automatic Detection of Altered Fingerprints", IEEE Computer, Vol. 45, No. 1, pp. 79-82, January, 2012.

J. Feng, A. K. Jain and A. Ross, "Detecting Altered Fingerprints", ICPR, Istanbul, Turkey, August 23-26, 2010.

Fingerprint, Face, Dental Biometrics, Online Signature

Fingerprint

Extended Feature Set for Fingerprint Matching

There are fundamental differences in the way fingerprints are compared by forensic experts and by current Automatic Fingerprint Identification Systems (AFIS). For example, AFIS focus mainly on quantitative measures of fingerprint minutiae (ridge ending and bifurcation points), while latent experts often analyze details of intrinsic ridge characteristics and relational information. This alternate process includes examination of an extended feature set of minutiae shape, dots, incipient ridges, local ridge quality, ridge tracing, etc. However, most of the features used by latent experts have not even been quantitatively defined for AFIS matching. This project aims to develop algorithms that automatically extract and match extended features.

A. K. Jain, Y. Chen and M. Demirkus, "Pores and Ridges: High Resolution Fingerprint Matching Using Level 3 Features", IEEE Transactions on Pattern Analysis and Machine Intelligence, 2006.

Y. Chen, M. Demirkus and A. K. Jain, "Pores and Ridges: Fingerprint Matching Using Level 3 Features", Proc. of International Conference on Pattern Recognition (ICPR), Vol. 4, pp. 477-480, Hong Kong, August, 2006.

Multispectral Fingerprint Matching

Multispectral (MS) fingerprint imaging systems use different wavelengths of light to illuminate the surface and the subsurface layers of the finger skin and capture the reflected light. The resulting fingerprint images, and combinations of these images, provide more discriminatory and robust information about the characteristics of the fingerprint than images from a TIR-based optical fingerprint sensor. To that end, we analyze the performance of different fingerprint matching algorithms on MS fingerprint images and explore new features that can be extracted from each image band.

Individuality of Fingerprints

The question of fingerprint individuality can be posed as follows: Given a query fingerprint, what is the probability that the observed number of minutiae matches with a template fingerprint is purely due to chance? An assessment of this probability can be made by estimating the variability inherent in fingerprint minutiae. We develop a compound stochastic model that is able to capture three main sources of minutiae variability in actual fingerprint databases. The compound stochastic models are used to synthesize realizations of minutiae matches from which numerical estimates of fingerprint individuality can be derived. Experiments on the FVC2002DB1 and IBMHURSLEY databases show that the probability of obtaining a 12 minutiae match purely due to chance is 1.6×10⁻⁵ when the query and template fingerprints each contain 46 minutiae.

Y. Zhu, S. C. Dass, and Anil K. Jain, "Compound Stochastic Models for Fingerprint Individuality", Proc. of International Conference on Pattern Recognition (ICPR), Vol. 3, pp. 532-535, Hong Kong, August, 2006.

S. C. Dass, Y. Zhu and Anil K. Jain, "Statistical models for assessing the individuality of fingerprints", Fourth IEEE workshop on Automatic Identification Advanced Technologies, pp. 1-7, 2005.

S. Pankanti, S. Prabhakar, and A. K. Jain, "On the Individuality of Fingerprints", IEEE Transactions on PAMI, Vol. 24, No. 8, pp. 1010-1025, 2002. A shorter version also appears in Fingerprint Whorld, pp. 150-159, July 2002.

Fingerprint Mosaicking

It has been observed that the reduced contact area offered by solid-state fingerprint sensors does not provide sufficient information (e.g., minutiae) for high accuracy user verification. Further, multiple impressions of the same finger acquired by these sensors may have only a small region of overlap, thereby affecting the matching performance of the verification system. To deal with this problem, we suggest a fingerprint mosaicking scheme that constructs a composite fingerprint image using multiple impressions. In the proposed algorithm, two impressions of a finger are initially aligned using the corresponding minutiae points. This alignment is used by the well-known iterative closest point (ICP) algorithm to compute a transformation matrix that defines the spatial relationship between the two impressions. The transformation matrix is used in two ways: (a) the two impressions are stitched together to generate a composite image, and minutiae points are then detected in this composite image; (b) the minutia maps obtained from each of the individual impressions are integrated to create a larger minutia map. The availability of a composite template improves the performance of the fingerprint matching system, as is demonstrated in our experiments.
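The initial alignment step can be illustrated as follows. This is a minimal sketch, assuming that minutiae correspondences are already known and that a rigid (rotation plus translation) model is adequate; it shows only the closed-form least-squares fit that ICP repeats at each iteration, not the full ICP loop or the mosaicking pipeline. The function name `rigid_transform_2d` is hypothetical.

```python
import numpy as np

def rigid_transform_2d(src, dst):
    """Least-squares rotation R and translation t such that dst ~ R @ src + t.

    src, dst: (n, 2) arrays of corresponding minutiae coordinates.
    """
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    src_c, dst_c = src.mean(axis=0), dst.mean(axis=0)
    # Cross-covariance of the centered point sets
    H = (src - src_c).T @ (dst - dst_c)
    U, _, Vt = np.linalg.svd(H)
    # Guard against a reflection so that R is a proper rotation
    d = 1.0 if np.linalg.det(Vt.T @ U.T) >= 0 else -1.0
    R = Vt.T @ np.diag([1.0, d]) @ U.T
    t = dst_c - R @ src_c
    return R, t
```

Applying `R` and `t` to the minutia map of one impression places it in the coordinate frame of the other, after which the two maps can be merged.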

A. K. Jain and A. Ross, " Fingerprint Mosaicking", Proc. International Conference on Acoustic Speech and Signal Processing (ICASSP), Orlando, Florida, May 13-17, 2002.

Hybrid Fingerprint Matcher

A fingerprint matcher that uses both minutiae and texture information present in fingerprints has been developed. A set of 8 Gabor filters is used to extract texture information inherent in fingerprints. Minutiae and/or core information is used to align two fingerprints. The hybrid matcher is shown to exhibit superior matching performance compared to a purely minutiae-based matcher.

A. Ross, A. K. Jain, and J. Reisman, "A Hybrid Fingerprint Matcher", Pattern Recognition, Vol. 36, No. 7, pp. 1661-1673, 2003.

A. Ross, J. Reisman and A. K. Jain, "Fingerprint Matching Using Feature Space Correlation", Proc. of Post-ECCV Workshop on Biometric Authentication, Copenhagen, Denmark, June 1, 2002.

A. K. Jain, A. Ross, and S. Prabhakar, "Fingerprint Matching Using Minutiae and Texture Features", Proc. International Conference on Image Processing (ICIP), Greece, October 7-10, 2001.

A. K. Jain, S. Prabhakar, L. Hong and S. Pankanti, "Filterbank-based Fingerprint Matching", IEEE Transactions on Image Processing, Vol. 9, No. 5, pp. 846-859, May 2000.
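A bank of 8 oriented Gabor filters can be sketched as below. This is an illustration only, not the matcher described above: the filter frequency, `sigma`, the mask size, the use of the average absolute deviation as the per-filter feature, and all function names are assumptions chosen for the example.

```python
import numpy as np

def gabor_kernel(theta, freq=0.1, sigma=4.0, size=33):
    """Even-symmetric Gabor kernel oscillating along direction theta (radians)."""
    half = size // 2
    y, x = np.mgrid[-half:half + 1, -half:half + 1].astype(float)
    xr = x * np.cos(theta) + y * np.sin(theta)
    yr = -x * np.sin(theta) + y * np.cos(theta)
    envelope = np.exp(-0.5 * (xr ** 2 + yr ** 2) / sigma ** 2)
    return envelope * np.cos(2.0 * np.pi * freq * xr)

def filter_fft(image, kernel):
    """Zero-padded linear convolution via FFT, cropped to the image size."""
    h, w = image.shape
    kh, kw = kernel.shape
    shape = (h + kh - 1, w + kw - 1)
    spectrum = np.fft.rfft2(image, s=shape) * np.fft.rfft2(kernel, s=shape)
    full = np.fft.irfft2(spectrum, s=shape)
    top, left = (kh - 1) // 2, (kw - 1) // 2
    return full[top:top + h, left:left + w]

def gabor_features(image, n_orientations=8):
    """One texture value per orientation: average absolute deviation of the response."""
    thetas = np.arange(n_orientations) * np.pi / n_orientations
    feats = []
    for theta in thetas:
        resp = filter_fft(image, gabor_kernel(theta))
        feats.append(np.mean(np.abs(resp - resp.mean())))
    return np.array(feats)
```

In practice such features would be computed per local cell of an aligned fingerprint rather than over the whole image, so that the feature vector retains spatial information.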

Fingerprint Classification

Fingerprint classification can provide an important indexing mechanism in a fingerprint database. An accurate and consistent classification can greatly reduce fingerprint matching time for large databases. In this paper, we present a fingerprint classification algorithm which is able to achieve an accuracy better than previously reported in the literature. We classify fingerprints into five categories: whorl, right loop, left loop, arch, and tented arch. The algorithm separates the number of ridges present in four directions (0, 45, 90, and 135 degrees) by filtering the central part of a fingerprint with a bank of Gabor filters. This information is quantized to generate a FingerCode which is used for classification. Our classification is based on a two-stage classifier which uses a K-nearest neighbor classifier in the first stage and a set of neural networks in the second stage. The classifier is tested on 4,000 images in the NIST-4 database. For the five-class problem, classification accuracy of 90% is achieved. For the four-class problem (arch and tented arch combined into one class), we are able to achieve a classification accuracy of 94.8%. By incorporating a reject option, the classification accuracy can be increased to 96% for the five-class classification and to 97.8% for the four-class classification when 30.8% of the images are rejected.

S. Dass and A. K. Jain, "Fingerprint Classification Using Orientation Field Flow Curves", Proc. of Indian Conference on Computer Vision, Graphics and Image Processing, (Kolkata), pp. 650-655, December 2004.

A. K. Jain and S. Minut, "Hierarchical Kernel Fitting for Fingerprint Classification and Alignment", Proc. of International Conference on Pattern Recognition, Quebec City, August 11-15, 2002.

A. K. Jain, S. Prabhakar and L. Hong, "A Multichannel Approach to Fingerprint Classification", IEEE Transactions on PAMI, Vol. 21, No. 4, pp. 348-359, April 1999.

Distinguishing Identical Twins Using Fingerprints

Automatic identification methods based on physical biometric characteristics such as fingerprint or iris can provide positive identification with a very high accuracy. However, the biometrics-based methods assume that the physical characteristics of an individual (as captured by a sensor) used for identification are distinctive. Identical twins have the closest genetics-based relationship and, therefore, the maximum similarity between fingerprints is expected to be found among identical twins. We show that a state-of-the-art automatic fingerprint identification system can successfully distinguish identical twins though with a slightly lower accuracy than nontwins.

A. K. Jain, S. Prabhakar, and S. Pankanti, "On The Similarity of Identical Twin Fingerprints", Pattern Recognition, Vol. 35, No. 11, pp. 2653-2663, 2002.

Combination of Fingerprint Matchers

Different fingerprint matching algorithms may use different types of information extracted from the input fingerprints and hence complement each other. Integration of fingerprint matching algorithms is a viable way to improve the performance of a fingerprint verification system. In this paper, we use a logistic transform to integrate the output scores from two different fingerprint matching algorithms. Each set of four parameters for a specified false acceptance rate (FAR) is obtained through supervised learning: the four parameters are adjusted so that the false rejection rate (FRR) is minimized for a given FAR. This results in optimizing a function with an unknown analytical form; hence the commonly used gradient-descent learning with an artificial neural network is not applicable. The optimization is solved by Brent's efficient numerical algorithm without the use of derivatives. Experiments conducted on a large fingerprint data set confirm the effectiveness of the proposed integration scheme.

A. K. Jain, S. Prabhakar and S. Chen, "Combining Multiple Matchers for a High Security Fingerprint Verification System", Pattern Recognition Letters, Vol. 20, No. 11-13, pp. 1371-1379, 1999.

S. Prabhakar and A. K. Jain, "Decision-level Fusion in Fingerprint Verification", Pattern Recognition, Vol. 35, No. 4, pp. 861-874, 2002.

Face

3D Face Recognition

The performance of face recognition systems that use two-dimensional (2D) images is dependent on consistent conditions such as lighting, pose, and facial expression. A multi-view face recognition system is being developed, which utilizes three-dimensional (3D) information about the face, along with the facial texture, to make the system more robust to those variations. A procedure is presented for constructing a database of 3D face models and matching this database to 2.5D face scans which are captured from different views. 2.5D is a simplified 3D (x, y, z) surface representation that contains at most one depth value (z direction) for every point in the (x, y) plane. A robust similarity metric is defined for matching. To address non-rigid facial movement, such as expressions, we present a facial surface modeling and matching scheme to match 2.5D test scans in the presence of both non-rigid deformations and large pose changes (multiview) to a neutral expression 3D face model. A geodesic-based resampling approach is applied to extract landmarks for modeling facial surface deformations. We are able to synthesize the deformation learned from a small group of subjects (control group) onto a 3D neutral model (not in the control group), resulting in a deformed template. A person-specific (3D) deformable model is built for each subject in the gallery with respect to the control group by combining the templates with synthesized deformations. By fitting this generative deformable model to a test scan, the proposed approach is able to handle expressions and large pose changes simultaneously.
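The 2.5D representation can be made concrete with a small sketch that reduces a 3D point set to a depth map with at most one z value per (x, y) cell. The grid layout, the `cell` size, the rule of keeping the largest z as the visible surface, and the function name `to_depth_map` are assumptions made for illustration.

```python
import numpy as np

def to_depth_map(points, grid_shape, cell=1.0):
    """Collapse (x, y, z) points into a 2.5D depth map.

    Cells that receive no point stay NaN. When several points fall into one
    cell, the largest z is kept (assumes the sensor looks along -z).
    """
    points = np.asarray(points, dtype=float)
    depth = np.full(grid_shape, np.nan)
    cols = np.floor(points[:, 0] / cell).astype(int)
    rows = np.floor(points[:, 1] / cell).astype(int)
    inside = (rows >= 0) & (rows < grid_shape[0]) & (cols >= 0) & (cols < grid_shape[1])
    for r, c, z in zip(rows[inside], cols[inside], points[inside, 2]):
        if np.isnan(depth[r, c]) or z > depth[r, c]:
            depth[r, c] = z
    return depth
```

Because each cell stores a single depth, surfaces hidden behind the visible one are discarded, which is why a scan from one viewpoint covers only part of the full 3D model.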

X. Lu and A. K. Jain, "Deformation Modeling for Robust 3D Face Matching", Proc. IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR2006), Vol. 2, pp. 1377-1383, New York, NY, Jun. 2006.

X. Lu and A. K. Jain, "Integrating range and texture information for 3D face recognition", Proc. of WACV (Workshop on Applications of Computer Vision), pp. 156-163, Breckenridge, Colorado, January 2005.

X. Lu and A. K. Jain, "Deformation Analysis for 3D Face Matching", Proc. of WACV (Workshop on Applications of Computer Vision), pp. 99-104, Breckenridge, Colorado, January 2005.

X. Lu, D. Colbry and A. K. Jain, "Three-Dimensional Model Based Face Recognition", Proc. International Conference on Pattern Recognition (ICPR), vol. I, pp. 362-366, Cambridge, UK, August 2004.

X. Lu, D. Colbry and A. K. Jain, "Matching 2.5D Scans for Face Recognition", Proc. International Conference on Biometric Authentication (ICBA), pp. 30-36, Hong Kong, July 2004.

X. Lu, R. Hsu, A. Jain, B. Kamgar-Parsi and B. Kamgar-Parsi, "Face Recognition with 3D Model-Based Synthesis", Proc. International Conference on Biometric Authentication (ICBA), pp. 139-146, Hong Kong, July 2004.

Face Recognition in Video

Face recognition in video has gained wide attention as a covert method for surveillance to enhance security in a variety of application domains (e.g., airports). A video contains temporal information as well as multiple instances of a face, so it is expected to lead to better face recognition performance compared to still face images. However, faces appearing in a video have substantial variations in pose and lighting. These pose and lighting variations can be effectively modeled using 3D face models. Combining the advantages of 2D video and 3D face models, we propose a face recognition system that identifies faces in a video. The system utilizes the rich information in a video and overcomes the pose and lighting variations using 3D face models. The 3D face models are obtained from a 3D range sensor and from a stereographic reconstruction process. Experimental results have shown that both kinds of 3D face models provide better face recognition performance by compensating for pose and lighting variations.

U. Park and A. K. Jain, "3D Face Reconstruction from Stereo Video", Proc. First International Workshop on Video Processing for Security (VP4S-06) in Third Canadian Conference on Computer and Robot Vision (CRV06), June 7-9, Quebec City, Canada, 2006.

U. Park, H. Chen and A. K. Jain, "3D Model-assisted Face Recognition in Video", Proc. of 2nd Workshop on Face Processing in Video, in conjunction with AI/GI/CRV05, pp. 322-329, Victoria, British Columbia, Canada, May 2005.

Face Detection

Human face detection is often the first step in applications such as video surveillance, human computer interface, face recognition, and image database management. We propose a face detection algorithm for color images in the presence of varying lighting conditions as well as complex backgrounds. Our method detects skin regions over the entire image, and then generates face candidates based on the spatial arrangement of these skin patches. The algorithm constructs eye, mouth, and boundary maps for verifying each face candidate. Experimental results demonstrate successful detection over a wide variety of facial variations in color, position, scale, rotation, pose, and expression from several photo collections.

R.-L. Hsu, Mohamed Abdel-Mottaleb and A. K. Jain, "Face Detection in Color Images", IEEE Transactions on PAMI, vol. 24, no.5, pp. 696-706, May 2002.

R.-L. Hsu, M. Abdel-Mottaleb, and A. K. Jain, "Face detection in color images", Proc. International Conference on Image Processing (ICIP), Greece, October 7-10, 2001.

Sarat C. Dass and A. K. Jain, "Markov Face Models", The Eighth IEEE International Conference on Computer Vision (ICCV), Vancouver, Canada, July 9-12, 2001.

Face Modeling

3D human face models have been widely used in applications such as face recognition, facial expression recognition, human action recognition, head tracking, facial animation, video compression/coding, and augmented reality. Modeling human faces provides a potential solution to the variations encountered in human face images. We propose a method of modeling human faces based on a generic face model (a triangular mesh model) and individual facial measurements containing both shape and texture information. The modeling method adapts a generic face model to the given facial features, extracted from registered range and color images, in a global to local fashion. It iteratively moves the vertices of the mesh model to smooth the non-feature areas, and uses 2.5D active contours to refine feature boundaries. The resultant face model has been shown to be visually similar to the true face. Initial results show that the constructed model is quite useful for recognizing profile views.

R.-L. Hsu and A. K. Jain, "Semantic face matching", Proc. IEEE Int'l Conf. Multimedia and Expo (ICME), Lausanne, Switzerland, Aug. 2002.

R.-L. Hsu and A. K. Jain, "Face modeling for recognition", Proc. International Conference on Image Processing (ICIP), Greece, October 7-10, 2001.

Combination of Face Matchers

Current two-dimensional face recognition approaches perform well only in constrained environments. However, in real applications, face appearance changes significantly due to different illumination, pose, and expression conditions. Face recognizers based on different representations of the input face images have different sensitivity to these variations. Therefore, a combination of several face classifiers which can integrate the complementary information should lead to improved classification accuracy. We use the sum rule and RBF-based integration strategies to combine three commonly used face classifiers based on PCA, ICA and LDA representations. Experiments conducted on a face database containing 206 subjects (2,060 face images) show that the proposed classifier combination approaches outperform individual classifiers.

X. Lu, Y. Wang and A. K. Jain, "Combining Classifiers for Face Recognition", Proc. ICME 2003, IEEE International Conference on Multimedia & Expo, vol. III, pp. 13-16, Baltimore, MD, July 6-9, 2003.
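The sum rule can be sketched as follows. This is a minimal illustration, assuming that each classifier outputs one similarity score per gallery subject and that min-max normalization is used to bring the scores onto a common scale; the normalization choice and the function name `sum_rule_fusion` are our assumptions, and the RBF-based strategy is not shown.

```python
import numpy as np

def sum_rule_fusion(score_lists):
    """Fuse similarity scores from several classifiers with the sum rule.

    score_lists: (n_classifiers, n_gallery) array-like, higher means more similar.
    Returns one fused score per gallery subject.
    """
    scores = np.asarray(score_lists, dtype=float)
    lo = scores.min(axis=1, keepdims=True)
    hi = scores.max(axis=1, keepdims=True)
    # Min-max normalize each classifier; constant rows map to zero
    span = np.where(hi > lo, hi - lo, 1.0)
    normalized = (scores - lo) / span
    return normalized.sum(axis=0)
```

For example, with hypothetical PCA, ICA and LDA scores for three gallery subjects, the subject with the highest fused score is reported as the match via `np.argmax(sum_rule_fusion(scores))`.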

Dental Biometrics

The goal of forensic dentistry is to identify people based on their dental records, mainly as radiograph images. In this paper we attempt to set forth the foundations of a biometric system for semi-automatic processing and matching of dental images, with the final goal of human identification. Given a dental record, usually as a postmortem (PM) radiograph, we need to search the database of antemortem (AM) radiographs to determine the identity of the person associated with the PM image.We use a semi-automatic method to extract shapes of the teeth from the AM and PM radiographs, and find the affine transform that best fits the shapes in the PM image to those in the AM images. A ranking of matching scores is generated based on the distance between the AM and PM tooth shapes. Initial experimental results on a small database of radiographs indicate that matching dental images based on tooth shapes and their relative positions is a feasible method for human identification.
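Fitting an affine transform between two tooth contours can be sketched with ordinary least squares. This is a simplified illustration that assumes point-to-point correspondences between the PM and AM contours are already available, which the description above does not specify; the function name `fit_affine` and the RMS residual used as a matching distance are likewise assumptions.

```python
import numpy as np

def fit_affine(src, dst):
    """Least-squares affine map dst ~ A @ src + t for corresponding 2D points.

    Returns the 2x2 matrix A, the translation t, and the RMS residual,
    which can serve as a simple shape distance (smaller means a better fit).
    """
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    X = np.hstack([src, np.ones((len(src), 1))])        # n x 3 design matrix
    params, *_ = np.linalg.lstsq(X, dst, rcond=None)    # 3 x 2 solution
    A, t = params[:2].T, params[2]
    residual = np.sqrt(np.mean(np.sum((src @ A.T + t - dst) ** 2, axis=1)))
    return A, t, residual
```

Ranking AM records by the residual of the best fit to the PM contour gives an ordering of candidate identities, in the spirit of the ranking described above.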

H. Chen and A. K. Jain, "Tooth Contour Extraction for Matching Dental Radiographs", Proc. ICPR 2004, vol. III, pp. 522-525, Cambridge, UK, August 2004.

G. Fahmy, D. Nassar, E. Haj-Said, H. Chen, O. Nomir, J. Zhou, R. Howell, H. H. Ammar, M. Abdel-Mottaleb and A. K. Jain, "Towards an Automated Dental Identification System (ADIS)", Proceedings of the International Conference on Biometric Authentication (ICBA), Hong Kong, July 2004.

A. K. Jain and H. Chen, "Matching of Dental X-ray Images for Human Identification", Pattern Recognition, Vol. 37, No. 7, pp. 1519-1532, July 2004.

A. K. Jain, H. Chen and S. Minut, "Dental Biometrics: Human Identification Using Dental Radiographs", Proc. of 4th Int'l Conf. on Audio- and Video-Based Biometric Person Authentication (AVBPA), pp. 429-437, Guildford, UK, June 9-11, 2003.

Online Signature

Signature Verification

We describe a method for handwritten signature verification. The signatures are acquired using a digitizing tablet which captures both dynamic and spatial information of the writing. After preprocessing the signature, several features are extracted. The authenticity of a writer is determined by comparing an input signature to a stored reference set (template) consisting of three signatures. The similarity between an input signature and the reference set is computed using string matching and the similarity value is compared to a threshold. Experiments on a database containing 1,232 signatures of 102 individuals show that user-specific thresholds yield better results than a common threshold. Several approaches to obtain the optimal threshold value from the reference set are investigated. The best result yields a false acceptance rate of 1.6% and a false reject rate of 2.8%.
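The comparison against a three-signature reference set can be sketched as below. The encoding of a signature as a string of stroke-direction symbols, the use of plain edit distance as the string matcher, and the rule that derives a user-specific threshold from the spread of the reference set are all assumptions chosen for illustration; the paper investigates its own features and threshold rules.

```python
from itertools import combinations
from typing import List


def edit_distance(a: str, b: str) -> int:
    """Levenshtein distance between two symbol strings."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        cur = [i]
        for j, cb in enumerate(b, start=1):
            cur.append(min(prev[j] + 1, cur[j - 1] + 1, prev[j - 1] + (ca != cb)))
        prev = cur
    return prev[-1]


def normalized_distance(a: str, b: str) -> float:
    """Edit distance divided by the longer string's length, in [0, 1]."""
    return edit_distance(a, b) / max(len(a), len(b))


def dissimilarity(signature: str, references: List[str]) -> float:
    """Smallest normalized distance from the input to any reference signature."""
    return min(normalized_distance(signature, r) for r in references)


def user_threshold(references: List[str], margin: float = 0.05) -> float:
    """Hypothetical rule: largest distance inside the reference set plus a margin."""
    return max(normalized_distance(a, b) for a, b in combinations(references, 2)) + margin


def verify(signature: str, references: List[str], threshold: float) -> bool:
    """Accept the signature if it is close enough to the reference set."""
    return dissimilarity(signature, references) <= threshold


# Hypothetical direction strings (R, U, D, L) for one enrolled writer.
references = ["RRUDLL", "RRUDL", "RUUDLL"]
threshold = user_threshold(references)
print(verify("RRUDDLL", references, threshold))  # a close variant of the references
print(verify("LLDDUR", references, threshold))   # a very different string
```

Deriving the threshold from each writer's own references means a writer with a consistent signature gets a tighter threshold than one whose signatures vary, which is the intuition behind user-specific thresholds.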

A. K. Jain, Friederike D. Griess and Scott D. Connell, "On-line Signature Verification", Pattern Recognition, vol. 35, no. 12, pp. 2963-2972, Dec 2002.

Friederike D. Griess and Anil K. Jain, "On-line Signature Verification", MSU Technical Report TR00-15, 2000.

General Biometrics

Biometric Template Selection and Update

The matching accuracy of a biometrics-based authentication system is affected by the stability of the biometric data associated with the users of the system. But due to factors such as improper interaction with the sensor, variations in environmental factors and temporary alterations of the biometric trait itself, biometric data have a large intra-class variability. Hence biometric template data can be significantly different from samples obtained during authentication. To alleviate this problem, multiple templates, instead of a single template, per user can be stored in a biometric database. In this project, we study techniques to automatically select and update templates by considering system performance along with storage and computational overheads associated with multiple templates.
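One simple way to select templates automatically is to keep the enrolled samples that are, on average, closest to all other samples of the same user. The sketch below implements that heuristic on a precomputed distance matrix; the heuristic itself, the distance values, and the function name are assumptions for illustration and should not be read as the specific procedures evaluated in the publications.

```python
from typing import List


def select_templates(dist: List[List[float]], k: int) -> List[int]:
    """Return the indices of the k samples with the smallest mean distance to the others."""
    n = len(dist)
    if not 1 <= k <= n or n < 2:
        raise ValueError("need at least two samples and 1 <= k <= n")
    mean_dist = [
        sum(dist[i][j] for j in range(n) if j != i) / (n - 1) for i in range(n)
    ]
    return sorted(range(n), key=lambda i: mean_dist[i])[:k]


# Hypothetical pairwise distances between four impressions of one finger;
# the last impression is an outlier (for example, a poor placement).
distances = [
    [0.0, 1.0, 2.0, 9.0],
    [1.0, 0.0, 1.0, 8.0],
    [2.0, 1.0, 0.0, 6.0],
    [9.0, 8.0, 6.0, 0.0],
]
selected = select_templates(distances, k=2)
print(selected)
```

Storing k templates instead of one multiplies both storage and matching cost by roughly k, so the choice of k is where system performance is traded against the overheads mentioned above.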

U. Uludag, A. Ross and A. K. Jain, "Biometric Template Selection and Update: A Case Study in Fingerprints", Pattern Recognition, Vol. 37, No. 7, pp. 1533-1542, July 2004.

A. K. Jain, U. Uludag and A. Ross, "Biometric Template Selection: A Case Study in Fingerprints", Proc. of 4th Int'l Conf. on Audio- and Video-Based Biometric Person Authentication (AVBPA), pp. 335-342, Guildford, UK, June 9-11, 2003.

Image Quality

Image quality is one of the variables that has the largest effect on biometric system accuracy and is the major cause of high false reject rates (FRR) and failure to enroll (FTE) rates. Automatic image quality measures can be developed to (i) provide real-time feedback to reduce the number of poor quality submissions to the system, (ii) predict and improve the authentication performance using quality-dependent thresholds, and (iii) generate quality-based user (or modality) specific weights for multi-biometric fusion.

Y. Chen, S. Dass and A. Jain, "Localized Iris Image Quality Using 2-D Wavelets", Proc. of International Conference on Biometrics (ICB), pp. 373-381, Hong Kong, January 2006.

J. Fierrez-Aguilar, Y. Chen, J. Ortega-Garcia and A. K. Jain, "Incorporating Image Quality in Multi-algorithm Fingerprint Verification", Proc. of International Conference on Biometrics (ICB), pp. 213-220, Hong Kong, January 2006.

Y. Chen, S. Dass and A. Jain, "Fingerprint Quality Indices for Predicting Authentication Performance", Proc. of Audio- and Video-based Biometric Person Authentication (AVBPA), pp. 160-170, Rye Brook, NY, July 2005.

K. Nandakumar, Y. Chen, S. C. Dass and A. K. Jain, "Quality-based Score Level Fusion in Multibiometric Systems", Proc. of International Conference on Pattern Recognition (ICPR), Vol. 4, pp. 473-476, Hong Kong, August 2006.

Sample Size Requirement for Performance Evaluation

Authentication systems based on biometric features (e.g., fingerprint impressions, iris scans, human face images) are gaining widespread use and popularity. Often, vendors and owners of these commercial biometric systems claim impressive performance that is estimated based on some proprietary data. In such situations, there is a need to independently validate the claimed performance levels. System performance is typically evaluated by collecting biometric templates from n different subjects, and for convenience, acquiring multiple instances of the biometric for each of the n subjects. Very little work has been done in (i) constructing confidence regions based on the ROC curve for validating the claimed performance levels, and (ii) determining the number of biometric samples needed to establish confidence regions of pre-specified width for the ROC curve. To simplify the analysis that addresses these two problems, several previous studies have assumed that multiple acquisitions of the biometric entity are statistically independent. This assumption is too restrictive and is generally not valid. We have developed a validation technique based on multivariate copula models for correlated biometric acquisitions. Based on the same model, we also determine the minimum number of samples required to achieve confidence bands of desired width for the ROC curve. We illustrate the estimation of the confidence bands as well as the required number of biometric samples using a fingerprint matching system that is applied on samples collected from a small population.

S. C. Dass, Y. Zhu and Anil K. Jain, "Validating a Biometric Authentication System: Sample Size Requirements", IEEE Transactions on Pattern Analysis and Machine Intelligence, pp. 1902-1913, 2006.

Heterogeneous Face Recognition

| ![Probe image: NIR](projects/heterogeneous/img4.png) | ![Probe image: thermal](projects/heterogeneous/img5.png) | ![Probe image: sketch](projects/heterogeneous/img6.png) | ![Probe image: forensic](projects/heterogeneous/img7.png) |
| --- | --- | --- | --- |
| ![Gallery image: NIR](projects/heterogeneous/img8.png) | ![Gallery image: thermal](projects/heterogeneous/img9.png) | ![Gallery image: sketch](projects/heterogeneous/img10.png) | ![Gallery image: forensic](projects/heterogeneous/img11.png) |
| (a) | (b) | (c) | (d) |

A key asset in identification using face recognition technology is the extensive collection of face databases (photographs). The sources of these databases range from ID card images (e.g. driver license and passport) and visa applications (e.g. US-VISIT) to criminal mug shot photographs. Because these databases are populated with visible light photographs, the commercial off-the-shelf (COTS) face recognition systems (FRS) used to search them expect incoming visible light probe (query) photographs. However, many military and law enforcement scenarios exist where a probe face image is only available in an alternate sensing modality. Consider the following examples: (i) when acquiring a face image in environments with unfavorable illumination (such as night time) or in covert military and intelligence scenarios, infrared imaging must be used to capture a subject's face, and (ii) when no opportunity exists to acquire a face image, a forensic sketch must be drawn from a verbal description provided by a witness to a crime. In each of these scenarios the probe image will be from a different modality (infrared or sketch) than the images in the gallery (visible light photograph). This identification task, where the probe and gallery images are from different modalities, is called heterogeneous face recognition (HFR). Since only limited data is available on face images in the non-visible spectrum (such as a database of infrared face images), accurate HFR systems are vital to enable identification in many critical situations. A COTS FRS performs poorly in heterogeneous face recognition. As a result, specialized matching systems must be developed to enable identification in these sensitive scenarios. This project aims at developing both generic solutions to multiple heterogeneous face recognition scenarios and tailored solutions to specific HFR scenarios.

Relevant Publication(s)

B. Klare and A.K. Jain, "Heterogeneous Face Recognition using Kernel Prototype Similarities", IEEE Transactions on Pattern Analysis and Machine Intelligence, 2012 (To Appear).

H. Han, B. Klare, K. Bonnen, and A. K. Jain, "Matching Composite Sketches to Face Photos: A Component-Based Approach", IEEE Transactions on Information Forensics and Security, 2012 (To Appear).

Z. Lei, S. Liao, A. K. Jain, and S. Z. Li, "Coupled Discriminant Analysis for Heterogeneous Face Recognition", IEEE Transactions on Information Forensics and Security, Vol. 7, No. 6, pp. 1707-1716, December 2012.

Z. Lei, C. Zhou, D. Yi, A. K. Jain, and S. Z. Li, "An Improved Coupled Spectral Regression for Heterogeneous Face Recognition", ICB, New Delhi, India, March 29-April 1, 2012.

A. K. Jain, and B. Klare, "Matching Forensic Sketches and Mug Shots to Apprehend Criminals", IEEE Computer, Vol.44, No. 5, pp. 94-96, May 2011.

B. Klare, Z. Li, and A. K. Jain, "Matching Forensic Sketches to Mugshot Photos", IEEE Transactions on Pattern Analysis and Machine Intelligence, Vol. 33, No. 3, pp. 639-646, March, 2011.

B. Klare and A. K. Jain, "Heterogeneous Face Recognition: Matching NIR to Visible Light Images", ICPR, Istanbul, Turkey, August 23-26, 2010.

B. Klare and A. K. Jain, "Sketch to Photo Matching: A Feature-based Approach", Proc. of SPIE, Biometric Technology for Human Identification VII, April 2010.

Kernel Methods for Multi-label Learning and Object Categorization

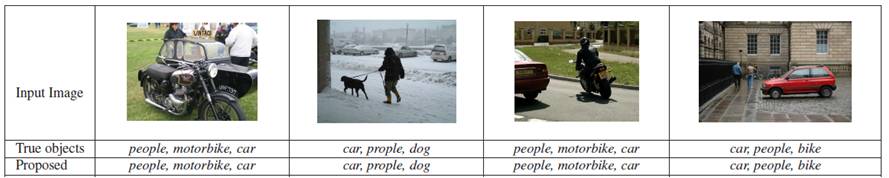

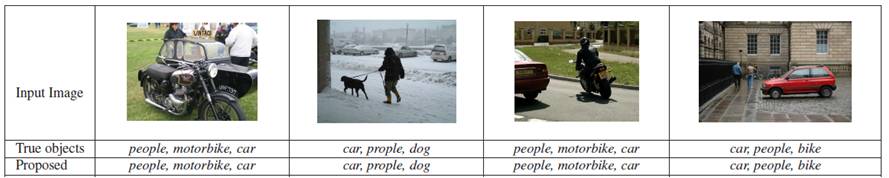

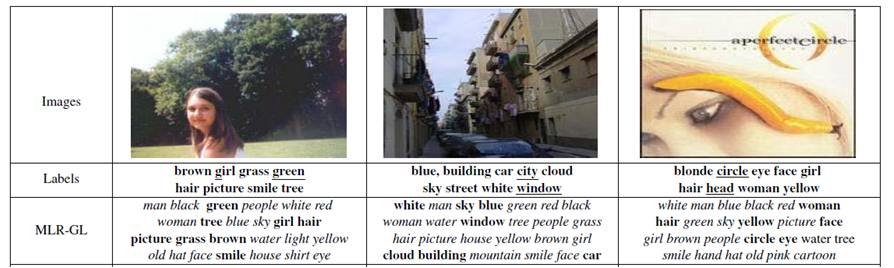

We are interested in the problem of multi-label learning with many classes, where the aim is to assign each instance to all of its related categories (Figure 1). Our goal is to develop kernel methods for multi-label learning. Unlike conventional approaches that implement multi-label learning as a set of binary classification problems, we develop direct approaches that exploit relationships between the class labels, and we proposed multi-label ranking methods for this task (see references below). Moreover, since kernel learning, and more specifically Multiple Kernel Learning (MKL), has attracted a considerable amount of interest in the computer vision community, we proposed an efficient algorithm for multi-label multiple kernel learning (ML-MKL; see reference below). We assume that all the classes under consideration share the same combination of kernel functions, and the objective is to find the optimal kernel combination that benefits all the classes.
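The shared-combination assumption can be made concrete with a small sketch: a single weight vector mixes the base kernel matrices, and every class then scores test points with that same combined kernel. The kernel values, the per-class coefficients, the weight normalization, and the function names are hypothetical; the sketch only shows how a shared combination is applied, not how ML-MKL learns the weights.

```python
from typing import List

Matrix = List[List[float]]


def combine_kernels(kernels: List[Matrix], weights: List[float]) -> Matrix:
    """Return sum_m beta_m * K_m, with the weights rescaled to sum to one."""
    if len(kernels) != len(weights):
        raise ValueError("one weight per kernel is required")
    if any(w < 0 for w in weights) or sum(weights) == 0:
        raise ValueError("weights must be non-negative and not all zero")
    total = sum(weights)
    rows, cols = len(kernels[0]), len(kernels[0][0])
    return [
        [sum(w / total * k[i][j] for w, k in zip(weights, kernels)) for j in range(cols)]
        for i in range(rows)
    ]


def class_scores(combined: Matrix, alphas: Matrix) -> Matrix:
    """scores[c][j] = sum_i alphas[c][i] * combined[i][j]; all classes share one kernel."""
    cols = len(combined[0])
    return [
        [sum(a[i] * combined[i][j] for i in range(len(a))) for j in range(cols)]
        for a in alphas
    ]


# Two hypothetical base kernels over two training points (rows) and two test points (columns).
k_color = [[1.0, 0.2], [0.2, 1.0]]
k_shape = [[1.0, 0.6], [0.6, 1.0]]
shared = combine_kernels([k_color, k_shape], weights=[0.5, 0.5])

# Hypothetical per-class coefficients for two classes.
alphas = [[1.0, -1.0], [0.5, 0.5]]
scores = class_scores(shared, alphas)
print(shared)
print(scores)
```

Because the weights are shared, only one combined kernel matrix has to be computed regardless of the number of classes, which matters when the label set is large.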

We also consider a special type of multi-label learning where the class assignments of training examples are incomplete. As an example, an instance whose true class assignment is (c1, c2, c3) is only assigned to class c1 when it is used as a training sample. We refer to this problem as multi-label learning with incomplete class assignment (Figure 2). We proposed a ranking-based multi-label learning framework (MLR-GL) that explicitly addresses the challenge of learning from incompletely labeled data by exploiting the group lasso technique to combine the ranking errors.

Relevant Publication(s)

S. S. Bucak, R. Jin, and A. K. Jain, "Multi-label Learning with Incomplete Class Assignments," IEEE Conference on Computer Vision and Pattern Recognition (CVPR 2011), Colorado Springs, CO, 2011.

S. S. Bucak, R. Jin, and A.K. Jain, "Multi-label Multiple Kernel Learning by Stochastic Approximation: Application to Visual Object Recognition," NIPS 2010, Vancouver, B.C., Canada, 2010.

S. S. Bucak, P. K. Mallapragada, R. Jin, and A. K. Jain, "Efficient Multi-label Ranking for Multi-class Learning: Application to Object Recognition," International Conference on Computer Vision (ICCV 2009), Kyoto, Japan, 2009.

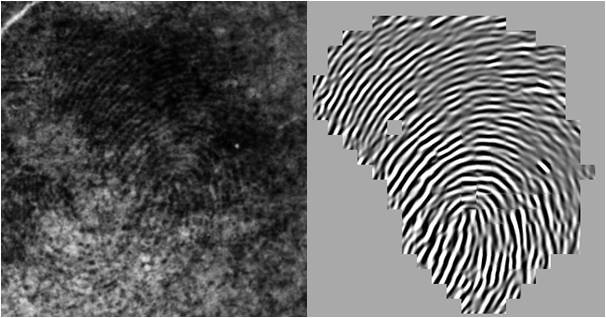

Latent Fingerprint Enhancement
